Abstraction Layers: In Arithmetic & Elsewhere

Counting to 84 by offshoring much of the real work to your information-technology ASI arabic-numeral symbolic copilot, and other topics about variation in proper education for the kind of numeroliteracy worthwhile to help you function in society over the past 5000 years…

Let us try to think, once again, about the real ASI; about us East African Plains Apes of (relatively) little brain; and about our ability, by drawing on the real ASI, to apply the massive mental force multipliers that we call abstraction layers.

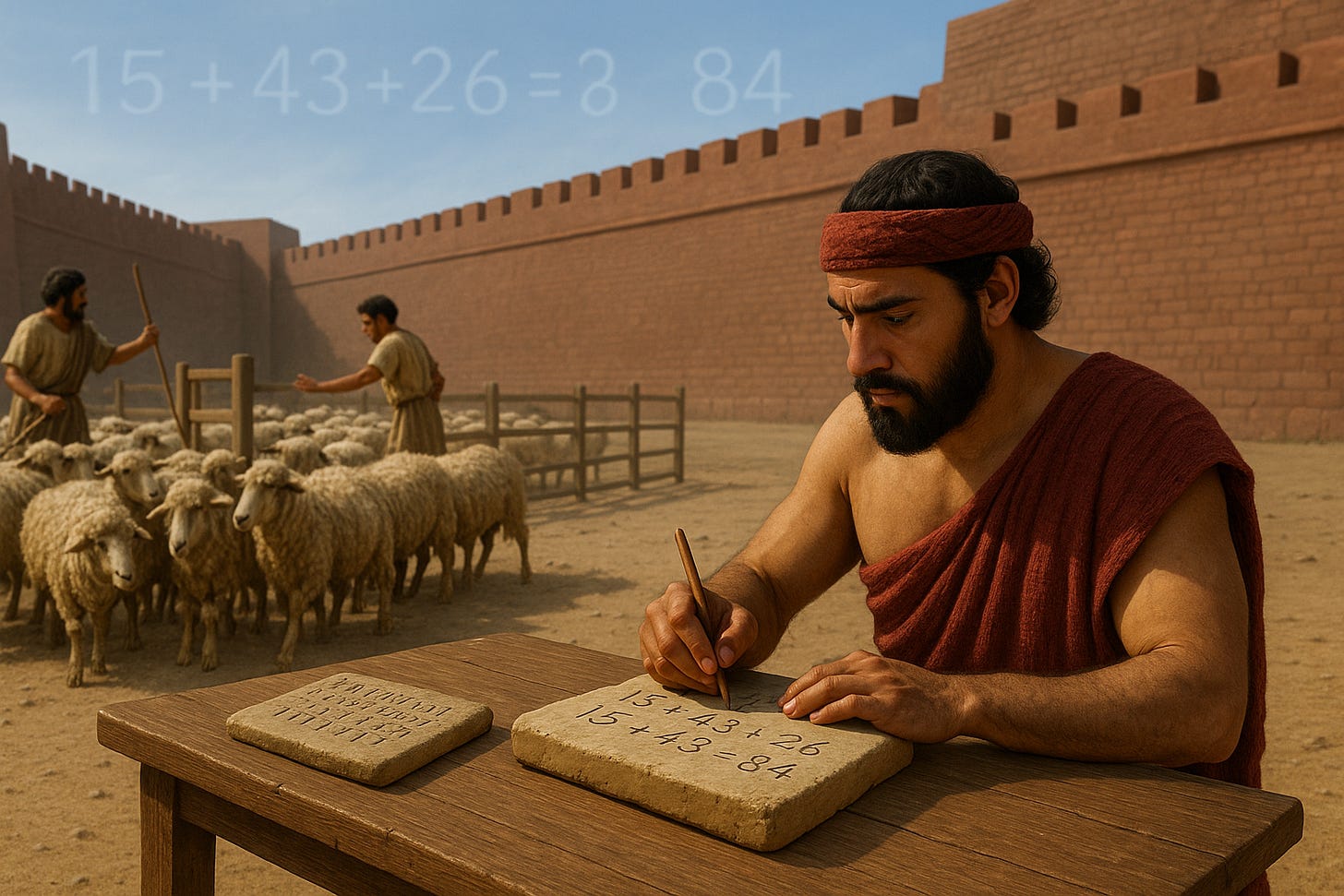

Cast your mind back five thousand years to the days of Gilgamesh, two-thirds god and one-third man, King of Uruk-the-Sheepfold. Climb the walls of Uruk, walk their length, survey their foundations, and study the brickwork. Is it not all made of solidly-crafted oven-baked bricks? Did not the Seven Sages lay the cornerstone? Two thousand acres for the city! Two thousand acres for the orchards! Two thousand acres for the pits of brick-clay! And one thousand acres for the Temple of Ishtar! At peace is the city of Uruk, protected by its wall. Prosperous are its people, well-provided with tools of bronze made of tin from Afghanistan and copper from Jordan well-mixed, well-fed on grain and mutton, well-clothed in wool and linen, well-housed by skilled builders.

Imagine that you are one of the understewards of King Gilgamesh, in charge of informing the cooks who are to prepare the feast for the climax of the Great Festival of Ishtar, the one in which Ishtar demonstrates that Gilgamesh is still he upon whom her favor rests. You need to tell the cooks about the sheep that they are going to roast. Fifteen sheep have arrived from the north, forty-three from the west, and twenty-six from the south, and there is a report that the barbarians of Elam have stolen those that were supposed to come from the east.

How do you figure out what to tell the cooks? You need to count the sheep.

The most straightforward way would be to send a shepherd, a numerate intern, and a couple of laborers with mobile fence-sections and a gate out to the sheepfold where the sheep are. Have the shepherd drive the sheep into one corner of the sheepfold, have the laborers set up the mobile fence and the gate across the corral, let the shepherd drive the sheep one-by-one through the gate into the other half of the corral, and have the intern count them as they pass, then report the total, eighty-four, along with notes on the individual characteristics of any sheep that seem at all likely to matter to the cooks.

Or, provided you trust the reports of the sheep-drivers, you could use what we call arabic numerals cognitive addition technology, thus:

15 + 43 + 26

= (10 + 5) + (40 + 3) + (20 + 6)

= (10 + 40 + 20) + (5 + 3 + 6)

= (1 x 10 + 4 x 10 + 2 x 10) + 14

= (1 + 4 + 2) x 10 + (1 x 10) + 4

= (1 + 4 + 2 + 1) x 10 + 4

= 8 x 10 + 4

= 84

The same eighty-four.

No need for shepherd, numerate intern, laborers, mobile fence-sections, gate, and (upstream in the production process) woodcutters and carpenters. Just you at your table with your clay tablet and your stylus, plus your ability to draw on and utilize the arabic numerals cognitive addition technology that is in the possession of the ASI: the Anthology Super-Intelligence, which actually exists and which gives you your meal-ticket by virtue of your ability to interface with it. (Never you mind that we are 4300 years anachronistic here.) OH NOES!!!! JOB DESTRUCTION! (Or, maybe, the woodcutters and carpenters could make other, also useful, things; the shepherd could perform an extra health check on the flock; the laborers could be put to work storing beer; and the intern could goof off.)

Maybe you are saying “WTF?!?!?!” and are thoroughly confused.

Maybe when you are asked to calculate 15 + 43 + 26 you just say “eighty-four”, and do not think about why. Odds are (unless you are Johnny von Neumann) you don’t go through all the steps in the indented math above. (In my mind’s eye, when I do the problem I see: 5… 8… 14… push to stack… 1… 5… 7… pull from stack… 8… 4… eighty-four.) But all the steps, and the shortcuts through them (the one-digit addition table, “carrying the one”, place-value for multiplying by ten, the commutative and associative properties of addition, and the distribution of multiplication over addition), are needed. All of them are needed to shift away from having to count all the sheep one-by-one. All of them are needed to shift to manipulating the symbols. All of them are needed to be able to do it much faster, and without intern, shepherd, laborers, woodcutters, and carpenters.
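The “5… 8… 14… push to stack…” routine is ordinary column addition with carrying. Here is a minimal Python sketch of it, assuming non-negative whole numbers written in base ten; `column_add` is a hypothetical name chosen for illustration:

```python
def column_add(*numbers: int) -> int:
    """Add non-negative integers column by column, carrying as we go."""
    # Split each number into digits, least-significant (ones) digit first.
    digit_lists = [[int(d) for d in reversed(str(n))] for n in numbers]
    width = max(len(ds) for ds in digit_lists)

    result_digits = []
    carry = 0  # the value "pushed to the stack" and pulled back in the next column
    for place in range(width):
        # Sum this column, treating a number that has run out of digits as 0.
        column_sum = carry + sum(ds[place] if place < len(ds) else 0 for ds in digit_lists)
        result_digits.append(column_sum % 10)  # digit written down in this column
        carry = column_sum // 10               # carried into the next column
    # Any carry left over after the last column becomes the leading digit(s).
    while carry:
        result_digits.append(carry % 10)
        carry //= 10
    return int("".join(str(d) for d in reversed(result_digits)))


print(column_add(15, 43, 26))  # 84
```

Each pass through the loop handles one column, and the `carry` variable plays the role of the “1” pushed to and pulled from the stack.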

This is an abstraction layer. Arabic-numeral math is an abstraction layer. The lower-level reality is a lot of large, smelly, furry herd mammals. But we hide the complexity and the individuality of the animals and treat them just as standard sheep. And then you hide complexity further, so that when you turn to your clay tablet and your stylus you are no longer dealing with sheep but merely with numbers. The work-organization and laborer-bossing skills that were needed to actually get the sheep counted back in 3000 BCE are no longer needed once you have moved up an abstraction layer.

There are losses here in dealing with things this way: the cooks will not know that sheep #5 is a “year-old male, without blemish”, for example, while the intern might well have included that in his report. But if the cooks really do need more information than “eighty-four!”, they will tell you so.
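The move up an abstraction layer, and what it throws away, can be sketched in a few lines of Python. Everything here is invented for illustration (the `Sheep` record, its fields, and the flock itself): the drivers’ reports yield eighty-four by adding three numbers, but a question about sheep #5 can only be answered one layer down, where the individual animals still exist.

```python
from dataclasses import dataclass


@dataclass
class Sheep:
    # Hypothetical per-animal record: the kind of detail the intern could note.
    origin: str
    age_years: int
    male: bool
    blemished: bool


def count_from_reports(reports: dict[str, int]) -> int:
    """Higher layer: trust the drivers' reports; only numbers remain."""
    return sum(reports.values())


def year_old_unblemished_males(flock: list[Sheep]) -> int:
    """Lower layer: a question the bare total cannot answer."""
    return sum(1 for s in flock if s.age_years == 1 and s.male and not s.blemished)


reports = {"north": 15, "west": 43, "south": 26}

# Invented flock: every animal a two-year-old ewe without blemish...
flock = [Sheep(origin, 2, False, False) for origin, n in reports.items() for _ in range(n)]
# ...except sheep #5 (index 4), a year-old male without blemish.
flock[4] = Sheep("north", 1, True, False)

print(count_from_reports(reports))        # 84: usually all the cooks need
print(len(flock))                         # 84: same answer, far more work
print(year_old_unblemished_males(flock))  # 1: visible only one layer down
```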

Good practice in being an effective and productive front-end in the real Anthology Super-Intelligence of the collective human mind—excuse me, good practice in white-collar work—involves building, utilizing, and working at as high an abstraction layer as possible in order to economize on cognitive effort. Transform problems up the chain layer by layer until the layer almost breaks, in the sense that trying to build and go up another layer will no longer produce answers your clients will find satisfactory to the questions that they will ask.

Moreover, you need not just to know how to do your share of the workload as a node in the ASI at the particular abstraction layer you have chosen to work at; you also need to know a lot about how to go about things at the next-lower abstraction layer, or two. Why? Because sometimes the problem you are considering will hit a bug in your abstraction layer, and the layer will break, and you will still have to do your job. And most of the time all abstraction layers leak meaning and accuracy and effectiveness, and you need to be able to judge whether those leaks are a problem for you and your clients, and to fix them if they are.
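A familiar instance of a leaky layer from computing, offered as an illustration rather than as part of the original argument: binary floating-point numbers present themselves as “just numbers”, but the layer beneath (finite binary fractions) leaks through. Knowing that lower layer is what lets you notice the leak and fix it, here with Python’s standard `math.fsum` or with exact `fractions.Fraction` arithmetic:

```python
import math
from fractions import Fraction

# At the "numbers" layer we expect ten tenths to make exactly one.
naive = sum([0.1] * 10)
print(naive == 1.0)  # False: 0.1 has no exact binary representation
print(naive)         # 0.9999999999999999

# Fix 1: an accurate summation that tracks the lost low-order bits.
print(math.fsum([0.1] * 10) == 1.0)  # True

# Fix 2: step around floats entirely with exact rational arithmetic.
print(sum([Fraction(1, 10)] * 10) == 1)  # True
```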

Now let me shift gears.

I was sitting across the dinner table from one of the barons of Silicon Valley. He has been asking recent college graduates he has been running across—well-prepared and talented young people newly employed at Apple, Anthropic, and various other Behemoths and Leviathans of tech—what pieces of their recent undergraduate computer‑science education are now proving useful for them in their jobs.

Their answer: none.

He says they say: The undergraduate computer science curriculum has not kept up.

The dominant vibe was that the right strategy was to get an internship and/or a part-time job starting as a freshman, to treat your CS courses as your side-hustle, and maybe, maybe, to spend your class time taking philosophy, art, and other things you are curious about and that you think would enrich your life.

The conversation then headed off into poetry appreciation. There was a brief side-conversation among some of us elders about how we had never used any of our semiconductor physics knowledge, and did anyone remember whether you needed 10^(-6) or 10^(-4) phosphorus atoms per silicon atom for a MOSFET gate? (It is actually something like 1.5 x 10^(-3).)

But the report that the usefulness of the CS courses was zero: that was something I thought about on the way home. Overstated, certainly, as young people are wont to do. But pointing at something: at a mismatch between the abstraction layer at which the Behemoths and Leviathans wish their new young hires to work, and the abstraction layers we are teaching them here at Berkeley.

And now I come to the third part of today’s lesson: my colleague Lakshya Jain, who rants about the students in recent instantiations of his course CS 186: Database Systems:

Lakshya Jain: ChatGPT and the end of learning <https://www.theargumentmag.com/p/chatgpt-and-the-end-of-learning>: ‘The normal patterns of CS 186 were completely disrupted…. Nobody seemed to have any questions or need any help on anything, no matter how complex it was. But people kept getting perfect scores on their coding assignments anyway…. [And] reality… [was] (handwritten) exam grades coming in at an all-time low… 15% below… normal…. Programming is about… think[ing] through the thing you want to do, writ[ing] the code for it, execut[ing] it, watch[ing] it fail, learn[ing] what was wrong, fix[ing] it, and try[ing] again. That forces you to learn the guts of what’s happening from first principles, and the more you do it, the better you become…. I can’t overstate how damaging it is for students to use AI as a means of short-circuiting this process…. My students were… cheating themselves out of vital development as engineers, failing to make the connections that practice and hard work help form…

But when you look at the syllabus for CS 186, my reaction is that it is not so much ChatBots that are to blame. Lakshya Jain appears to be getting caught in several sets of gears:

First, “think[ing] through the thing you want to do, writ[ing] the code for it, execut[ing] it, watch[ing] it fail, learn[ing] what was wrong, fix[ing] it, and try[ing] again…” is a cycle that can be, and is being, done at the copilot-prompt level. The problem is that the problems assigned in CS 186 are too easy for the age of MAMLMs, in the sense that the models “know” the answers immediately. Time and thought need to be invested in designing problem sets for the age of Copilot ChatBots, and that has not been done.

Moreover, it seems that CS 186 may currently be pitched at the wrong abstraction layer to be a preprofessional CS course for this day and age. At least, alumni and alumnae are not broadcasting back to the university: “Pay attention in CS 186! You will have to use it!” “This was really useful!” is something that every teacher of a preprofessional course should hear, regularly, from his former students. Are the CS 186 teachers hearing that regularly? It seems that they are not.

Thus Lakshya Jain has perhaps not thought enough about the abstraction layers at which his students will be working during their careers. But here we run into a problem: we do not know what those layers will be. Computer languages and work practices are always changing: procedures, objects, frameworks, piled on top of one another. It has always seemed to me that CS courses should be revised every two or three years so that they are always using the most currently fashionable frontier framework (CFFF), and that they should do everything two ways: once with the CFFF, and once at an abstraction layer a notch lower. However, I am not an expert.
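What “doing everything two ways” might look like in a databases course is sketched below, under loudly stated assumptions: the two tables and their contents are invented, a declarative SQL query run through Python’s standard `sqlite3` module stands in for the higher layer, and a hand-written hash join stands in for the layer a notch lower. Both routes should produce the same rows:

```python
import sqlite3

# Invented toy tables: sheep delivered per region, and who drove each region's flock.
DELIVERIES = [("north", 15), ("west", 43), ("south", 26)]
DRIVERS = [("north", "Enkidu"), ("west", "Urshanabi"), ("south", "Siduri")]


def join_with_sql() -> list[tuple[str, int]]:
    """Higher layer: declare what we want and let the database plan the join."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE deliveries (region TEXT, sheep INTEGER)")
    con.execute("CREATE TABLE drivers (region TEXT, name TEXT)")
    con.executemany("INSERT INTO deliveries VALUES (?, ?)", DELIVERIES)
    con.executemany("INSERT INTO drivers VALUES (?, ?)", DRIVERS)
    rows = con.execute(
        "SELECT d.name, v.sheep FROM deliveries AS v "
        "JOIN drivers AS d ON v.region = d.region"
    ).fetchall()
    con.close()
    return sorted(rows)


def join_by_hand() -> list[tuple[str, int]]:
    """One layer down: an in-memory hash join, written out explicitly."""
    # Build phase: hash one relation (typically the smaller) on the join key.
    by_region: dict[str, list[str]] = {}
    for region, name in DRIVERS:
        by_region.setdefault(region, []).append(name)
    # Probe phase: look up each delivery's region in the hash table.
    rows = [(name, sheep)
            for region, sheep in DELIVERIES
            for name in by_region.get(region, [])]
    return sorted(rows)


print(join_with_sql() == join_by_hand())          # True
print(sum(sheep for _, sheep in join_by_hand()))  # 84
```

The hand-written version is not meant for production; it is there so that a student can see roughly what the database’s join operator has to do.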

But, last, and perhaps most important, there is what Chad Orzel calls the separation between “education” and “credentialling”:

Chad Orzel: The Central Tension of Higher Education <https://chadorzel.substack.com/p/the-central-tension-of-higher-education>: ‘For a wide range of reasons, the link between credentials and the affect of actual education is now completely broken…. That ship has sailed, burned, fallen over, and sunk into the swamp. Students no longer feel obliged to feign an interest in actual education as a prerequisite for getting their credential and their white-collar career, and thus, they don’t. Which leads to grade-grubbing and corner-cutting in subjects with direct and obvious connection to credentials that are perceived as useful, and cratering enrollments in subjects that aren’t.

That cultural shift helps drive a kind of tectonic drift widening the gap between the functions, which in turn increases the ambient angst level in and around higher education. Faculty who want to be doing actual education are confronted with students who blatantly want only credentialing, and vice versa, and everyone starts to spiral. So that’s the mismatch that I think is at the root of everything. I wish I had an elegant and optimistic solution to propose, not least because given the amount of money sloshing around the system, being the Man With The Plan would make me rich beyond dreams of avarice…

Back in the day it was difficult to get the credential without actually getting an education, as doing (at least some of) the work was largely unavoidable.

If I were in charge of UC Berkeley right now, I would suggest that the faculty spend this year having a roiling conversation about:

* how the societal credentialling function is going to work going forward, and what the rôle of Cal is in giving our students a leg-up in that process;

* at what abstraction layers we need to pitch what we teach so that it is of the most use to students who want to be educated men and women (who want to be effective front-end nodes to the real ASI) in order to enrich their lives; and

* at what abstraction layers we need to pitch what we teach so that it is of the most use to students who merely wish to persuade potential employers that they will be effective interface nodes between their employers’ organizations and the ASI, so that those employers will pay them money.

As a counterpoint (acknowledging a probable bias in that direction), I think it's impossible to do that cycle of thinking with the MAMLMs (you can't pick up when it's BSing you, or putting you onto a path with huge costs later) without understanding what's going on in the layer below, and that does take, I think, doing the work.

I'd characterize the situation, at best, as equivalent to that of statistical packages: to fit a statistical model I often do things like

* Write a `brm(...)` call in R

* ... which generates Stan code

* ... which compiles to C

* ... which does MCMC

* ... which...

* ... which hopefully does some inference...

* ... which maaaybe tells me something about the bits of the world I'm thinking about.

Lots of abstraction layers there, and my knowledge of many of them lies somewhere between "very cursory" and "once upon a time." However, I do have some direct knowledge (I've put some time, for related or unrelated reasons, into some of the maths and the code and so on), which means that I have a sometimes-reasonable idea of what all of this can do, what it can't do, and when it's giving me BS (not because of a bug, but because I'm pushing it to do what it can't).
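To make the MCMC layer in that stack a little less of a black box, here is a rough sketch of what such a sampler does, in plain Python. It is a deliberately simplified stand-in: Stan’s default sampler is the No-U-Turn variant of Hamiltonian Monte Carlo, not the random-walk Metropolis algorithm shown here, and the data, the flat prior, the normal likelihood with known unit variance, the step size, and the iteration counts are all illustrative assumptions:

```python
import math
import random


def log_posterior(mu: float, data: list[float]) -> float:
    """Log posterior for the mean, up to a constant: flat prior, Normal(mu, 1) likelihood."""
    return -0.5 * sum((x - mu) ** 2 for x in data)


def metropolis(data: list[float], n_iter: int = 20_000, step: float = 0.5,
               seed: int = 0) -> list[float]:
    """Random-walk Metropolis: propose a nearby value, accept it with
    probability min(1, posterior ratio), otherwise keep the current value."""
    rng = random.Random(seed)
    mu = 0.0  # deliberately poor starting point, so burn-in matters
    current = log_posterior(mu, data)
    draws = []
    for _ in range(n_iter):
        proposal = mu + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, data)
        # Compare on the log scale; 1 - random() lies in (0, 1], so log() is safe.
        if math.log(1.0 - rng.random()) < candidate - current:
            mu, current = proposal, candidate
        draws.append(mu)
    return draws


data = [4.8, 5.3, 5.1, 4.6, 5.2, 4.9, 5.4, 5.0]  # invented observations
draws = metropolis(data)[5_000:]                  # discard the first 5,000 as burn-in
estimate = sum(draws) / len(draws)
print(round(estimate, 2))
```

With a flat prior and unit variance the exact posterior is normal, centered on the sample mean (about 5.04) with standard deviation 1/√8 ≈ 0.35, so the draws can be checked against a known answer.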

But I know far too many people whose approach to data analysis is "throw the data at the program, press the FIT MODEL button, make decisions based on a p-value and the sign of a coefficient." Sometimes I do it myself. Thus is furthered the sale of rue.

Extrapolating, I worry that people who do know the lower layers are having so much success iterating with MAMLMs that they underestimate how necessary their understanding is to that success. A good programmer can co-code with one of these systems quite well, but I don't know that you can become a good programmer without being able to code without one.

Granted, there are caveats here: you don't need to know assembler to write good code in most languages, and so on. As Whitehead would say, that's the point of civilization. But MAMLMs don't offer the guarantees a compiler can, or a mathematical process, or even a car's automatic gearbox: they can go wildly or subtly wrong in ways you can't detect without knowing about things one or two layers below what you're discussing with them. I don't know how to learn that without doing some of the work.

[I have other, unrelated reasons to decry the use of natural language as the primary interface for writing and editing code: it reduces the already too-low short-term organizational incentive to build and improve the formalized domain abstractions that end up being one of the main ways in which organizations scale up their thinking. Just as with the shift from Latin to algebra, the short-term limitations have a cumulative payoff. I would hypothesize that a physicist who never has to learn to think in algebra will have an easier time early on but won't go far in his understanding. The same goes for coding.]

Abstraction is built into the brain. For example, once the brain recognizes and characterizes a surface, it drops the details that allowed that recognition. That's why it's so hard to find a small item one has dropped on the floor. One is sure that one has looked at the entire floor, but finding that item may require looking at each part of the floor individually, a far more tedious task. As noted, sometimes one needs to change one's level of abstraction.

I went through the CS course at MIT in the 1970s and more recently helped my niece get through the CS course at Stanford. I found the courses highly relevant to my career, and my niece found them equally relevant to hers. There were differences. For example, the P=NP problem had not been formulated as such when I took CS, but I had taken a course addressing computational complexity, so the ideas were familiar. We both studied algorithms, even though Knuth literally wrote the book just in time for my undergraduate education.

It's easy for someone managing a company, and so dealing at a very high level of abstraction, to argue that what one learns in a CS course isn't relevant or useful in one's career. The reality is that it is immensely important. Just as studying poetry teaches one about rhyme, scansion, metaphor and allusion, studying CS teaches one about complexity, modularity, experimental design and algorithmic expression. Having studied CS then and now, I found that things had changed remarkably little. Top level executives working at the highest level of abstraction are often the most clueless about what is happening in their organizations and in their business.

Orzel makes some good points about the tension between learning and getting credentials, but this tension has always been there. There have always been ways to cheat. Oxford was notorious for granting degrees to the sons of aristocrats on a handshake basis but still managed to crank out a good supply of the literate, numerate and otherwise educated. Some of them were even the sons of aristocrats. LLMs just mean that credential granting institutions need to change some of their tactics.