Background: The OpenAI Coup: Mistaking the Deployment of a Hallucinatory Stochastic-Parrot ChatBot for the Trump of Doom

Takes on OpenAI...

Henry Farrell: What OpenAI shares with Scientology: ‘The back-story to all of this is actually much weirder than the average sex scandal…. [As] Large Language Models (LLMs) like OpenAI’s GPT-4… have become increasingly powerful, theological arguments have begun to mix it up with the profit motive…. Science fiction was the gateway drug, but it can’t really be blamed for everything…. Faith in the Singularity has roughly the same relationship to SF as UFO-cultism…. The combination of cultish Singularity beliefs and science fiction has influenced a lot of external readers, who don’t distinguish sharply between the religious and fictive elements, but mix and meld them to come up with strange new hybrids…. In the 2010s, ideas about the Singularity cross-fertilized with notions about Bayesian reasoning and some really terrible fanfic to create the online “rationalist” movement….

A crowd of bright but rather naive (and occasionally creepy) computer science and adjacent people try to re-invent theology from first principles…. All sorts of strange mental exercises, postulated superhuman entities benign and malign and how to think about them; the jumbling of parts from fan-fiction, computer science, home-brewed philosophy and ARGs to create grotesque and interesting intellectual chimeras; Nick Bostrom, and a crew of very well funded philosophers; Effective Altruism, whose fancier adherents often prefer not to acknowledge the approach’s somewhat disreputable origins…

Do not call the technologies that OpenAI is currently researching and deploying “AI” (Artificial Intelligence), and really really really do not call them “AGI” (Artificial General Intelligence). They are not that. Call them “LLM-GPT-ML” (Large Language Models-Generative Pre-trained Transformers-Machine Learning) instead. As these technologies have come to the fore over the past two years, they have had three roles:

The Next Big Thing, post-crypto bubble, for separating somewhat gullible venture capitalists and wannabes from their money.

Very high-dimension big-data classification and regression analysis.

Actually useful and non-annoying voice interface to databases.

Calling these technologies “AI” greatly reinforces their ability to perform the first of these roles. This first role is a bad role. It should be squelched.

In its second role these technologies are, so far at least, useful but incremental improvements in our previous capabilities to classify and predict. They are useful, and are going to be increasingly useful as they are further developed. But they are unlikely to be earth- or economy-shaking. It is very nice that my computer can now do background processing to not just pick out photos I have taken in which a dog appears but also correctly classify the black-muzzled 70-lb. dog as “Hunter”:

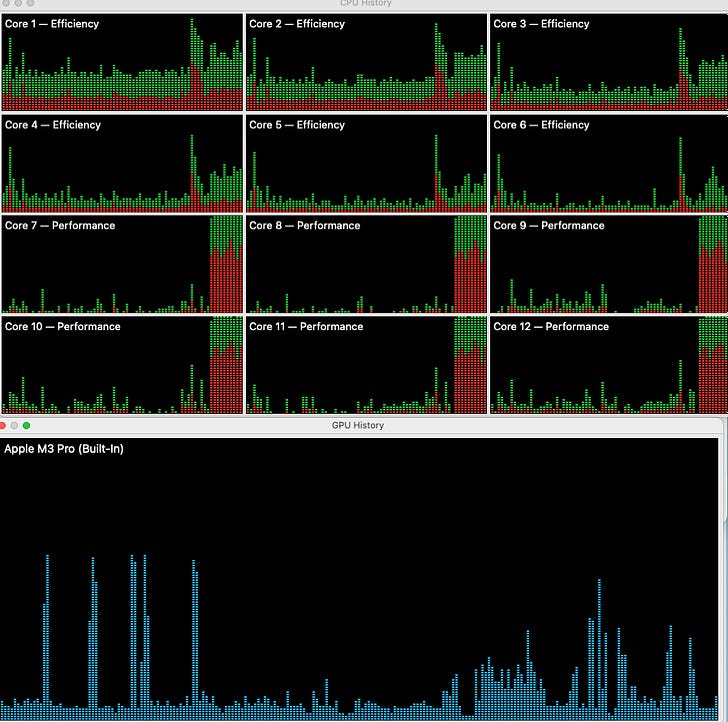

And it is nicer still that it can do the background processing needed without even breaking a sweat, so that it becomes instantly responsive when I actually need all the CPU cores for something.

But while this makes me happy to have let my bank account fall by some $3,500, it does not transform the world.

It is in its third role, as an actually useful and non-annoying voice interface to databases, that these LLM-GPT-ML technologies are potentially earth- and economy-shaking. So much of our communication is by voice (and text) that information technologies that take advantage of this sweet spot of our interaction affordances promise to reshape profoundly perhaps 1/5 of what all white-collar workers and information-technology consumers do. Never mind that they are hallucinatory stochastic parrots. They can give us the capability to think and do a great deal much more quickly.

In this, they are like, successively:

the mainframe

the PC

the internet

the smartphone

social media—although maybe that one should have a minus sign attached to it

They will change how we work and play, how our societies’ communications networks function, and how a great deal of money flows. There are serious, serious problems of adapting and deploying these technologies so that they redound to our benefit.

And, of course, these technologies are not yet ready (and may never become fully ready) for prime time as voice interfaces to databases. Yes, it is much easier for me to just ask ChatGPT4 questions than to conduct Google searches when I want to learn about Louis XIV Bourbon’s nephew Philippe II, Duc d’Orléans (1674-1723), Regent of France 1715-1723. But it is then a problem for ChatGPT4 that it gets confused and conflates information about Philippe (1674-1723) with an earlier Philippe (1336-1376) and a later Philippe (1747-1793; great-grandson of Philippe-the-Regent). This is not a problem for me, because I know that there are different Philippes and know to check the ChatBot for hallucinations. But fitness for prime-time use is still beyond OpenAI’s grasp: its ChatBots do not know that Wikipedia’s human-name disambiguation pages are strongly relevant guides to what answers they should provide.

This is no more plausibly on the road to AGI than a project to train parrots to staff call centers would be.

But it may become an effective, non-annoying voice interface to databases. It is not yet there. And if it does become one, that in itself will be a really big deal.

Camilla Hodgson & George Hammond: Who were the OpenAI board members that sacked Sam Altman?: ‘A former Facebook executive, an AI researcher, a tech entrepreneur and a computer scientist… Adam D’Angelo, Helen Toner, Tasha McCauley and Ilya Sutskever[’s] oust[ing] Sam Altman set off a dramatic chain of events…. How the four had come to hold the keys to the future direction of the leading AI company remains unclear. Neither investors nor staff could explain how the slimmed-down board, which is half the size it was in 2021, was appointed…. The board offer[ed]… no specific reason for their decision beyond saying he had not been “consistently candid”…. One person who worked with D’Angelo… said he was a poor communicator and that the board’s lack of communication was “not surprising”…. McCauley… like Toner, is a supporter of effective altruism—an intellectual movement that has warned of the risks that AI could pose to humanity…. Sutskever… later realigned himself with Altman, saying he “deeply [regretted] my participation in the board’s actions”…

And from Wednesday’s Wall Street Journal:

Keach Hagey, Deepa Seetharaman, & Berber Jin: Behind the Scenes of Sam Altman’s Showdown at OpenAI: ‘The [OpenAI] executive team… pressed the board over the course of about 40 minutes for specific examples of Altman’s lack of candor…. The board refused, citing legal reasons…. The board agreed to discuss the matter with their counsel. After a few hours, they returned…. They said that Altman wasn’t candid, and often got his way… [but] had been so deft they couldn’t even give a specific example…

The total explanation we have for the Board’s action consists of three things:

The first is the “OpenAI Announces Leadership Transition” November 17 blog post:

The board of directors of OpenAI, Inc., the 501(c)(3) that acts as the overall governing body for all OpenAI activities, today announced that Sam Altman will depart as CEO and leave the board of directors…. Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI….

OpenAI was deliberately structured to… ensure that artificial general intelligence benefits all humanity. The board remains fully committed to serving this mission…. We believe new leadership is necessary as we move forward…. While the company has experienced dramatic growth, it remains the fundamental governance responsibility of the board to advance OpenAI’s mission and preserve the principles of its Charter…

The second is Board member Ilya Sutskever, as reported by The Information, saying that the Board members:

stand by their decision as the ‘only path’ to defend the company’s mission…. Altman’s behavior and board interactions undermined its ability to supervise the company’s development of artificial intelligence…

The third is the Board majority’s claims that:

Altman wasn’t candid, and often got his way… [but] Altman had been so deft they couldn’t even give a specific example…

in its video call with the OpenAI executive team.

What if there is some logic to justify gross breaches of protocol and, apparently, of rational and civil behavior? What if they are, in their own minds at least, but mad north-north-west, and do know a hawk from a handsaw?

It seems worthwhile to try to figure out what they might have been thinking—why they thought it was necessary that the four of them conspire, freeze the CEO and the Board chair out of their meetings, and then announce the removal of the Board chair and the firing of the CEO without ever having had an actual meeting of the Board to discuss issues.

And here I think Henry Farrell has it right: they went off into woo-woo land.

The place to start is that sometime between the year -167 and the year -164 someone decided it would be a good idea to write a story about a dream, a vision by night, that they would claim the -500s figure of the prophet Daniel (“God is my judge”) had had 400 years before:

I saw in my vision by night…. The Ancient of Days did sit, whose garment was white as snow, and the hair of his head like the pure wool: his throne was like the fiery flame, and his wheels as burning fire. A fiery stream issued and came forth from before him: thousand thousands ministered unto him, and ten thousand times ten thousand stood before him: the judgment was set, and the books were opened…. The beast was slain, and his body destroyed, and given to the burning flame….

And, behold, one like the Son of Man came with the clouds of heaven, and came to the Ancient of Days, and they brought him near before him. And there was given him dominion, and glory, and a kingdom, that all people, nations, and languages, should serve him: his dominion is an everlasting dominion, which shall not pass away, and his kingdom that which shall not be destroyed…

As Henry Farrell puts it, believers in Effective Altruism, Singularity Version, really do hold that:

The risks and rewards of AI are seen as largely commensurate with the risks and rewards of creating superhuman intelligences, modeling how they might behave, and ensuring that we end up in a Good Singularity, where AIs do not destroy or enslave humanity as a species, rather than a bad one…. Not everyone working on existential AI risk (or ‘x risk’ as it is usually summarized) is a true believer…. Most, very probably, are not. But you don’t need all that many true believers to keep the machine running…. Even if you, as an AI risk person, don’t buy the full intellectual package, you find yourself looking for work in a field where the funding, the incentives, and the organizational structures mostly point in a single direction….

It is at best unclear that theoretical debates about immanentizing the eschaton tell us very much about actually-existing “AI,” a family of important and sometimes very powerful statistical techniques, which are being applied today, with emphatically non-theoretical risks and benefits. Ah, well, nevertheless. The rationalist agenda has demonstrably shaped the questions around which the big AI ‘debates’ regularly revolve…. We are on a very strange timeline…. In AI, in contrast, God and Money have a rather more tentative relationship. If you are profoundly worried about the risks of AI, should you be unleashing it on the world for profit? That tension helps explain the fight…