Notes, Not so & Briefly Noted: For 2023-03-25 Sa

Forthcoming Narayan Lecture; private CFR "Gilded Ages" panel; what economists miss; strange gibberish webcomics; cracking the productivity-slowdown ice?;

My Notes:

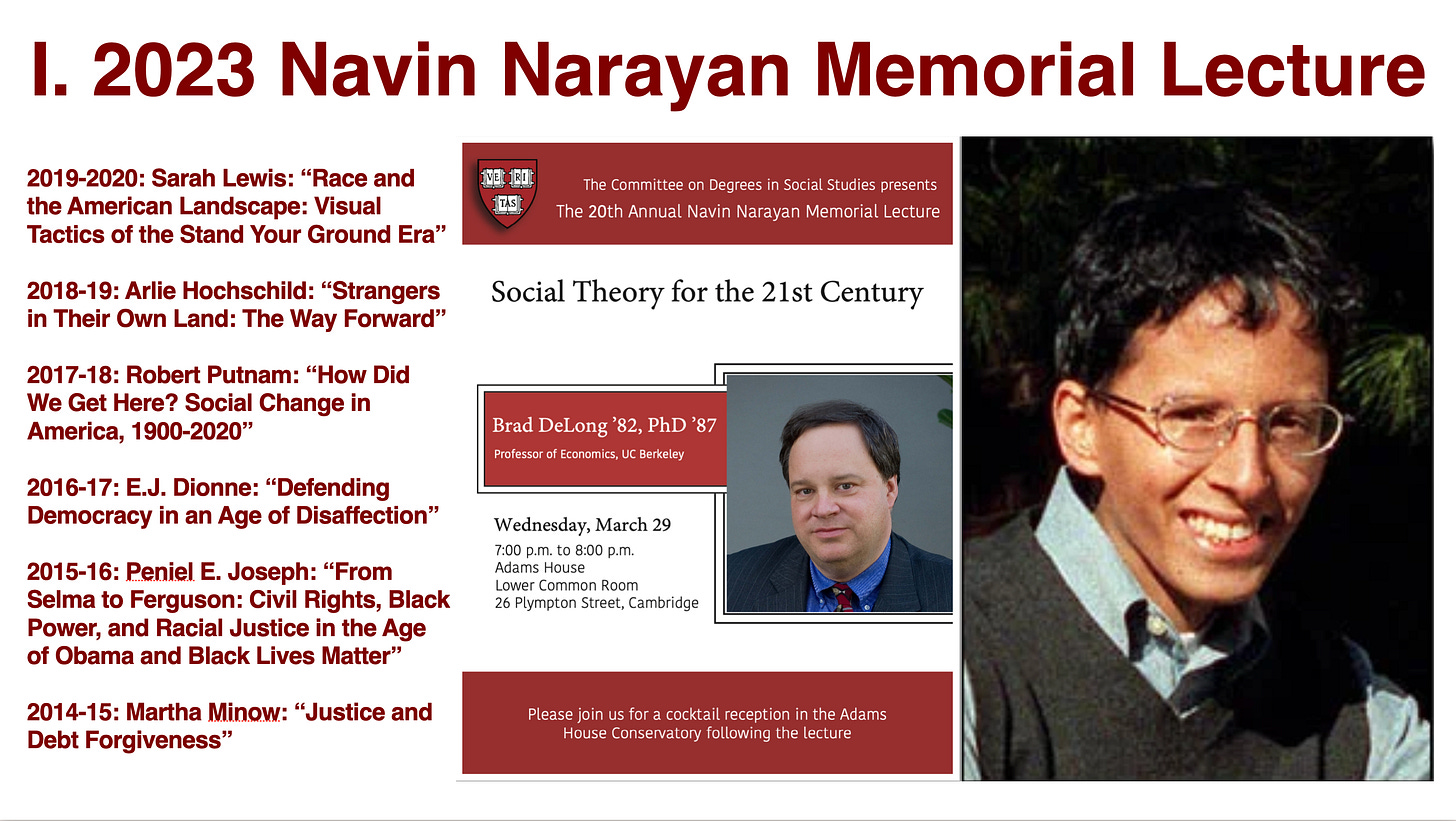

Objects in Your Calendar Are Closer Þan Þey Appear:

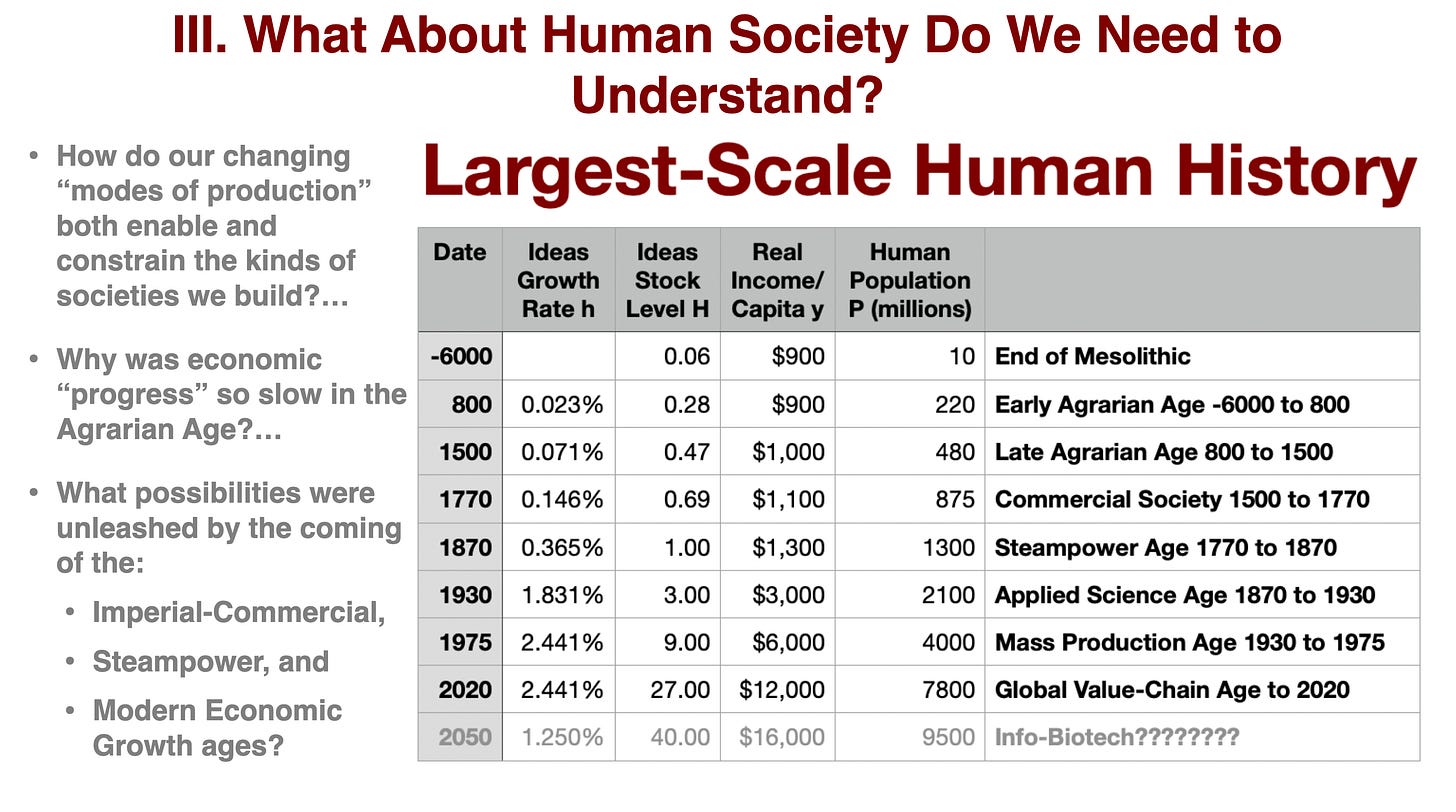

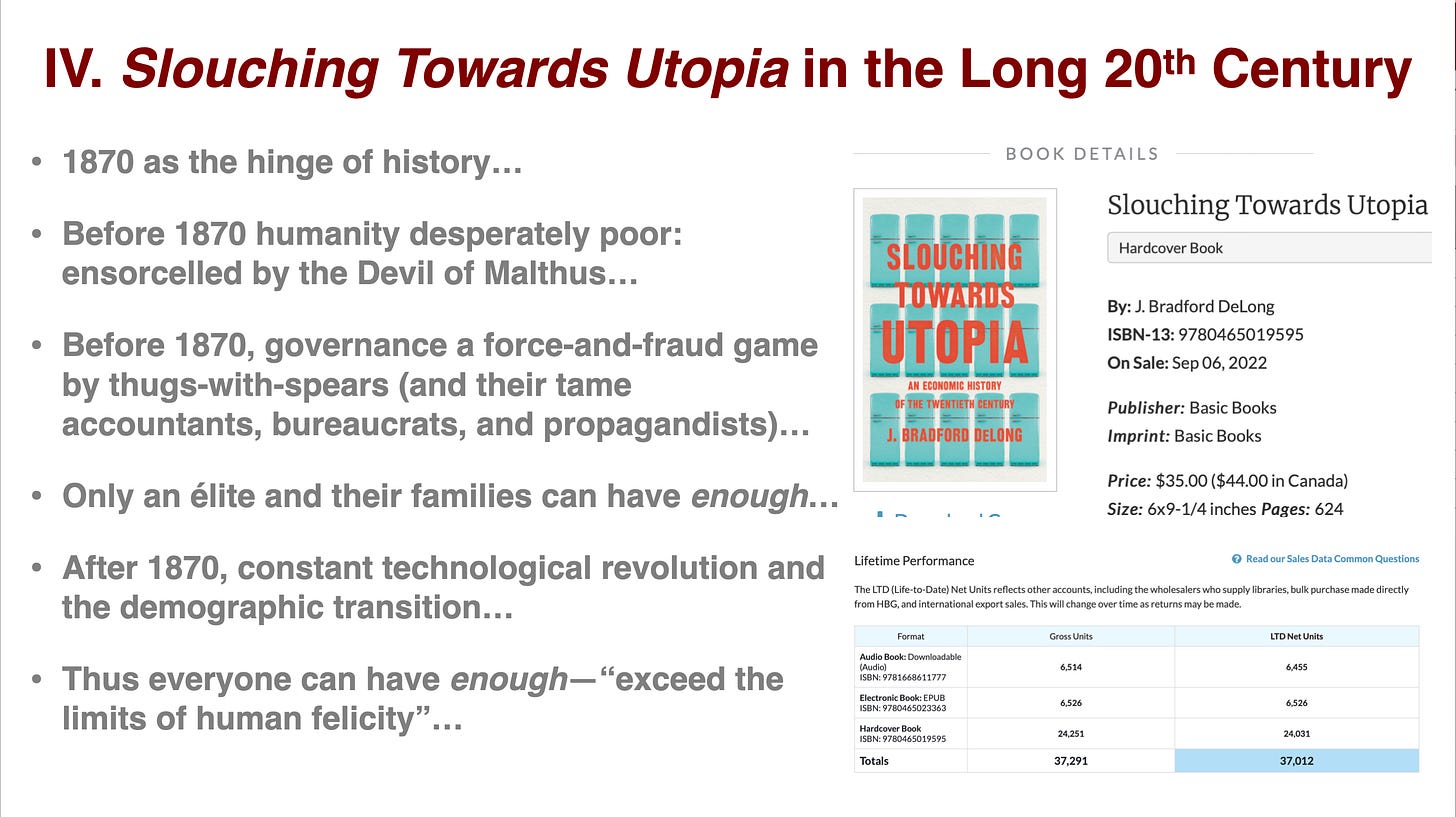

Preliminary Slides:

From a March 21 Council on Foreign Relations Panel wiþ Nancy Unger & Gideon Rose on Analogies Between þe First (1870-1914) & Second (1981-?) Gilded Age:

Rapid industrialization and urbanization in the first.

Technological growth and economic inequality in both.

“Populism” (if we can call it that today) in both.

I think calling today’s movements “populist” hides much more than it illuminates—call them “fascist” or “Cæsarist” instead.

Immigration backlash in both—but no Teddy Roosevelt figures.

Optimism in the first Gilded Age fueled by belief that expertise and passion could be harnessed to address societal issues.

Second Gilded Age different because everyone knows we have seen this pattern before.

The populists of the late 1800s had policy ideas that were more grounded in reality than those of today’s “populist” movements.

Racism and ethnocentrism as distractors.

Oscillations between periods of market liberalization on the one hand and periods of regulation, redistribution, and social protection on the other.

Interesting Q&As:

Technocratic expertise on the one hand, and the weaponization of ignorance on the other.

Back in the First Gilded Age, all thought reliable information was their friend—all could agree on the importance of labor statistics and think tanks.

Contrast the striking rejection of truth in favor of truthiness in the second.

Why isn’t there a rôle for a Teddy Roosevelt figure in the Republican Party today?

LLMs and other GPTs (Generative Pre-trained Transformers) as the third (fourth?) GPT (General-Purpose Technology) generated out of the transistor.

Optimism of the will is fine! But maybe a little more pessimism-of-the-intellect, and a little less ostrich-like behavior?

Alas! The CFR wants to hold the contents of its panels very close…

ONE VIDEO: What Economics Is Missing:

ONE IMAGE: Secret Complete Gibberish Prompts Generate Strange Webcomics:

MUST-READ: Cracking þe Ice of þe Productivity Slowdown?:

I have been thinking this too, though with much less confidence than Ethan Mollick:

Ethan Mollick: And the great gears begin to turn again...: ‘Generative AI… early indications are that it can act as a shortcut for many different kinds of tasks… documents… designs… slide decks…. Old constraints on managerial work might change very quickly…. Our clearest sign… is… programming. AI tools have been used extensively in programming over the past year, and it seems to make a big difference. In a recent randomized controlled trial of Copilot, an AI programming companion (conducted by Microsoft, who owns the tool), using AI cut the time required to complete a programming task in half…

We cannot see the invention and early diffusion of the personal computer in the aggregate productivity statistics. What we can see, both in the aggregate productivity statistics and in the worldwide distribution of economic activity, is the combined effect of the late diffusion of the personal computer and of the internet. But then we cannot see Web2 at all.

How many organizations will find themselves suddenly able to do much more over the next five years? And how much of that “much more” will be zero-sum—will simply increase the information-processing load on organizations downstream of those that take advantage of a Generative AI programming revolution?

Very Briefly Noted:

Pilita Clark: Years of climate scepticism have done untold damage: ‘Erroneous claims, scientific caution and poor media coverage held back policymaking on global warming…

BSurveillance: ‘Markets reevaluate recessions and rate cuts in the post-Fed hangover. Join @tomkeene, @FerroTV, & @lisaabramowicz1 for the conversations that power your day on Bloomberg TV, Bloomberg Radio & the Surveillance podcast: <https://trib.al/u4F6k5E>…

F.J. Dyson: The Radiation Theories of Tomonaga, Schwinger, and Feynman…

Apoorva Mandavilli: ‘We Were Helpless’: Despair at the C.D.C. as the Pandemic Erupted: ‘Current and former employees recall rising desperation as Trump administration officials squelched research into the new coronavirus…

Mark Johnson: ‘The substrate doesn't really matter, as Hammond says: “the discovery that giant inscrutable matrices can, under the right circumstances, do many things that otherwise require a biological brain is itself a striking empirical datum.” If computational things can play the language game, then we should treat them as thinking things, regardless of what's sitting below the language coming out the other side…

Steve M.: Not Indicting Trump Will Also Make Him More Popular with the Base: ‘He'll be the superhero depicted in those ridiculous NFTs. He beat Soros! He beat the Deep State! He beat the swamp!…

ESG Hound: The Death of a Bethlehem Mill: ‘A look at a 20th century industrial marvel through the lens of capitalism and sustainability…. Sparrow’s Point… Baltimore…

Josh Marshall: DeSantis Stumbles Over Ukraine: ‘D.C. insiders’ assumptions… have shifted…. The main driver in the shift is D.C. opinion-makers seeing how much Trump’s expected indictments narrow the path for anyone to unseat him as the leader of the GOP. And it was crazy narrow already. Meanwhile, Ron DeSantis is showing he just lacks the dexterity to accomplish it…

¶s:

Steve Schmidt: Access journalism: delusion and arrogance are a bad combination: ‘Twenty years ago, before she joined Fox News and NewsMax, Judith Miller was a New York Times superstar and a Pulitzer Prize winner. More than any other reporter in America, she advanced the idea that Iraq had weapons of mass destruction that justified preemptive war. She was in the access business, and her ambition was insatiable. The simple truth is that without The New York Times and Judith Miller the pro-war ideologues would never have been successful in their misinformation strategy. She became the conduit for all manner of politicized misinformation that asserted that Saddam Hussein had a vast arsenal of weapons of mass destruction. She was the author of dozens of stories, all based on anonymous sources that made claims that were untrue. She was manipulated by everyone from Ahmed Chalabi to Scooter Libby without much resistance. Her anonymous sources were presented to the world as credible, and their false claims were presented as cold hard facts on the front pages of The New York Times…

Steve M.: New Dimensions in "Can Only Be Failed": ‘I think many… genuinely believe… the January 6 protesters were innocents who've been unfairly persecuted by a tyrannical regime, so they believe that in any future protest they'll either be arrested for mere speech or brainwashed into behavior that's violent and illegal…. Put this together with the belief many MAGAs have that the Deep State rigs all elections… [and] we're deeper than ever before in the territory of "conservatism can't fail, it can only be failed."… Now they can't even hold a protest march because the left-wing evildoers will inevitably subvert that. So while they really do represent the overwhelming majority of The People, sinister forces make them look like a minority, then make it impossible for them to show how overwhelmingly popular they are…

Steve Yegge: Cheating is All You Need: ‘LLMs are trained on an absolutely staggering amount of data… but that doesn’t include your code. There are two basic approaches…. Fine-tune (or train) on your code…. Bring in a search engine…. You talk to LLMs by sending them an action or query, plus some relevant context…. The context window… [is] the “cheat sheet” of information…. Maybe 100k of text, to input into the LLM as context for your query…. That’s how you talk to an LLM. You pass it a cheat sheet. Which means what goes ON that cheat sheet, as you can probably imagine, is really really important…. You’d better be really goddamn good at fetching the right data to stuff into that 100k-char window. Because that’s the only way to affect the quality of the LLM’s output!…
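A minimal sketch of the "cheat sheet" idea Yegge describes: rank candidate snippets for relevance to the query, then greedily pack the best ones into a fixed character budget ahead of the query. The toy word-overlap scorer stands in (as an assumption) for a real search engine or embedding index, the 100,000-character default follows Yegge's "maybe 100k of text," and `Snippet`, `score`, and `build_prompt` are hypothetical names, not any real library's API. The budget covers only the packed context, not the query itself.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical label, e.g. a file path in your codebase
    text: str


def score(query: str, snippet: Snippet) -> int:
    """Toy relevance score: number of distinct query words found in the snippet.
    Assumed stand-in for a real search engine or embedding similarity."""
    query_words = set(query.lower().split())
    snippet_words = set(snippet.text.lower().split())
    return len(query_words & snippet_words)


def build_prompt(query: str, snippets: list[Snippet], budget_chars: int = 100_000) -> str:
    """Greedily pack the highest-scoring snippets into a character budget,
    then append the query. Snippets that would overflow the budget are skipped."""
    ranked = sorted(snippets, key=lambda s: score(query, s), reverse=True)
    parts: list[str] = []
    used = 0
    for s in ranked:
        if score(query, s) == 0:
            break  # list is sorted descending, so the rest are irrelevant too
        block = f"### {s.source}\n{s.text}\n"
        if used + len(block) > budget_chars:
            continue  # too big to fit; a smaller, lower-ranked snippet might
        parts.append(block)
        used += len(block)
    return "".join(parts) + f"\nQuestion: {query}\n"


if __name__ == "__main__":
    docs = [
        Snippet("billing.py", "def charge(customer, amount): apply tax then charge card"),
        Snippet("README", "This project renders webcomics"),
    ]
    print(build_prompt("how is tax applied to a charge", docs, budget_chars=200))
```

The design choice is the one Yegge stresses: the model sees only what lands in the window, so all the leverage sits in the ranking and packing step, not in the query text.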

Dave Troy: Some observations about LLM’s: ‘Math: Can't really do math, doesn't have any model for it. Research: Makes stuff up, can't be trusted, is extremely confident. Coding: Unless it hits the correct example somewhere else, will usually get it wrong. Data Analysis: fakes it until it makes it, but breaks down at any kind of scale. Writing: as likely as not to plagiarize in ways that are hard to predict. If this was an employee or intern, I'd fire it. ;)…

Sam Hammond: We’re all Wittgensteinians now: ‘I see the success of LLMs as vindicating the use theory of meaning, especially when contrasted with the failure of symbolic approaches to natural language processing. Transformer architectures were the critical breakthrough precisely because they provided an attention mechanism for conditioning on the contexts of an input sequence, allowing a model to infer meaning from full sentences instead of one word at a time. Frege thus had it right over 150 years ago when he said to “never ask for the meaning of a word in isolation, but only in the context of a proposition”…. As today’s preeminent AI skeptic, the above discussion should help put Gary Marcus’s obsession with compositionality into perspective. The principle of compositionality states that "the meaning of a complex expression is a function of the meanings of its constituents and the way they are combined.” According to Marcus, "Large Language Models don't directly implement compositionality — at their peril.” But if Wittgenstein and the inferential pragmatists are right, neither does the human mind. The dogma that models of natural language must have the same sort of compositionality found in programming and mathematics is thus little more than a repeat of the same Cartesian fallacy committed by the logical positivists nearly a century ago…
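What "an attention mechanism for conditioning on the contexts of an input sequence" means mechanically can be sketched with the standard scaled dot-product attention from the transformer literature. This is a generic illustration, not anything taken from Hammond's piece: each output is a weighted average of the value vectors, with weights given by a softmax over how well the query matches each key. Real models add learned projections, multiple heads, and masking, all omitted here; the function names are illustrative.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]],
              keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention: for each query q, weights are
    softmax(q . k / sqrt(d)) over all keys k, and the output is the
    weighted average of the corresponding value vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # sums to 1 across the sequence
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Two identical keys get equal weight, so the output is the mean of the values.
    print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [4.0, 2.0]]))
    # prints [[3.0, 1.0]]
```

The point relevant to Hammond's argument is that each position's representation is computed from the whole sequence at once, weighted by context, rather than from the word in isolation.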

Ethan Mollick: Acceleration: ‘The disappointing model first. That would be Google’s long-awaited Bard. I have had access for 24 hours, but so far it is… not great. It seems to both hallucinate (make up information) more than other AIs and provide worse initial answers…. Bing… solid and even thoughtful-feeling… provides sources (though these can be hit or miss, and Bing still hallucinates, just less often)…. GPT-4… I have been using it to write programs in Python and Unity (programming languages I literally do not know at all!) by just telling it what I want…. Midjourney… now generates photo-realistic pictures with a simple sentence…. Even if AI technology did not advance past today, it would be enough for transformation. GPT-4 is more than capable of automating, or assisting with, vast amounts of highly-skilled work without any additional upgrades or improvements. Programmers, analysts, marketing writers, and many other jobs will find huge benefits to working with AI, and huge risks if they do not learn to use these tools…. The only way to prepare for the future is to get as comfortable as possible with the AIs available today. Everyone should practice using them for work and personal tasks, so that you can understand their uses and limits. Things are only going to accelerate further from here…

Blame for the Iraq war: let's leave a bit for MoveOn's "It's about oil" meme.

Mandavilli: Not to minimize having to navigate the Trump noise machine, but WAS the CDC really trying to get the best, constantly changing information into the hands of the public and policy makers so THEY could make the most cost-effective decisions [and leaning on the FDA to promote cheap and dirty screening tests to lower the costs of spread prevention]?