Discover more from Brad DeLong's Grasping Reality

CONDITION: Filibuster Elimination Threat:

When I arrived in Washington, in the spring of 1993, the very wise then-Assistant Secretary of the Treasury for Legislative Affairs Mike Levy told me that things would pass the Senate only under three conditions:

(1) They were Republican priorities that enough Democrats thought were on balance good for the nation, or

(2) They were done via the Reconciliation process, or

(3) Republicans would go along because Democrats would credibly threaten to use Reconciliation to pass something less good for the country that Republicans would dislike even more.

He was wise.

Now we need to add:

(4) Things pass because if they don’t Manchin and Sinema will be really pissed, and might vote to get rid of the filibuster.

This is how I think it will be in the Senate, for a while.

The House over the next two years will be weird: it will be almost impossible for McCarthy to control the Republican caucus, so the things that will pass the House will be:

Republican priorities where Speaker McCarthy will induce and Hakeem Jeffries will allow enough Democrats to join the coalition to make up for Republican defections.

Democratic priorities that moderate Republicans think are good for the nation where Shadow-Speaker Jeffries will convince ten or so moderate Republicans to tell McCarthy to go f*** himself.

Joseph Zeballos-Roig: Big scandal, weird scandal: ‘Sen. Todd Young, the Indiana Republican who helped author one of this year’s highest-profile bipartisan bills, recently offered his theory…. Working across the aisle helped Republicans keep the filibuster alive in a 50-50 Senate by giving… Manchin… and… Sinema… wins…. “[Republican] leadership [did not] try to blow up deals,” Young said… McConnell…. “We wanted… center-right or center-left deals that had broad appeal… but as importantly, kept Sinema and Manchin happy so they didn't join the 'eliminate the filibuster' crowd. It was a wise calculation.” McConnell said much the same…. Passing the bipartisan bills was in the “best interest of the country,” it also “may have reassured” Manchin and Sinema…. Young also suggested CHIPS would have drawn more GOP support under a Republican administration. “I had countless colleagues approach me and say that they believed in this investment and believed it was important to national security and economic security,” he said. “But they shared with me this was a very hard argument for some constituents”…

FOCUS: Perhaps Some Stochastic Parrots Are Intentional?:

“Tell me a story”, I prompt Chat-GPT:

What is Chat-GPT doing here? We are told:

Murray Shanahan: Talking About Large Language Models <https://arxiv.org/pdf/2212.03551.pdf?ref=the-diff> ‘[It] has no communicative intent… no understanding… does not… have beliefs…. All it does, at a fundamental level, is sequence prediction…. Knowing that the word “Burundi” is likely to succeed the words “The country to the south of Rwanda is” is not the same as knowing that Burundi is to the south of Rwanda…. A compelling illusion…. Yet… such systems are fundamentally not like ourselves… present… a patchwork of less-than-human with superhuman capacities, of uncannily human-like with peculiarly inhuman behaviours…. It may require an extensive period of interacting with, of living with, these new kinds of artefact before we learn how best to talk about them. Meanwhile, we should try to resist the siren call of anthropomorphism…

Does this really get it right? Yes, we should resist “the siren call of anthropomorphism”. But is it right to say that there is no intentionality here?

My punchline: I disagree with Shanahan. There is intentionality. But we have to be precise about just whose.

Consider: Yes, what Chat-GPT has done in response to my prompt is to predict a sequence of tokens. But what sequence did it predict?

It took all of the sequences of tokens—words—it has available, all of those in which a human being responded to “tell me a story” in some way, plus some others. It then took those sequences, put them in a bag, shook them, and printed what came out. Chat-GPT had no intention of “telling me a story”.

But each of the sequences of tokens that went into Chat-GPT had, attached to it, a human who did intend to tell a story. Thus all the intentions of all the people who ever told a story—they lie behind the token sequences that are its raw material. Each of the sequences in its database has intentionality.

Can there be a sense in which averaging a huge number of speech acts, each of which has intentionality, removes the intentionality?

Well, no… and yes. Yes, in that what makes things intentional is precisely the individuality of the thought process, and that is what is averaged away.

But also no…

Remember the “Chinese-Speaking Room”?

Wikipedia: Searle… supposes that he is in a closed room and has a book with an English version of the computer program, along with sufficient papers, pencils, erasers, and filing cabinets. Searle could receive Chinese characters through a slot in the door, process them according to the program's instructions, and produce Chinese characters as output…. Searle himself would not be able to understand the conversation. ("I don't speak a word of Chinese," he points out)...

It is true that if you accept Searle’s framing—a sentence in Chinese comes into the room, and he then consults the books in the room to decide how to respond—then to have the complexity of a human brain, the “Chinese-Speaking Room” would have to be the size of the globe, and managed by 50,000 robots traveling at lightspeed. The system that is the room understands Chinese just as well (or poorly) as a human speaker of Chinese does.

My view is that Chat-GPT already has that branch of intentionality that humans have when they think “I am going to write a grammatical sentence”, because that intentionality was shared by virtually everyone whose writings have gone into the bag. And, similarly, if we can elicit responses from GPT that are derived from a community of humans every one of whom used that response with the same intention, I have no problem ascribing intention to Chat-GPT.

For example:

Every human who has ever used the sentence “Colorless green ideas sleep furiously” has done so not to assert that colorless green ideas sleep furiously, but to illustrate the huge gulf between syntax and semantics.

So I have no problem ascribing that intention to Chat-GPT, just as I have no problem—at least adopting the intentional stance—with saying that Chat-GPT intends to write grammatical sentences.

On the other hand:

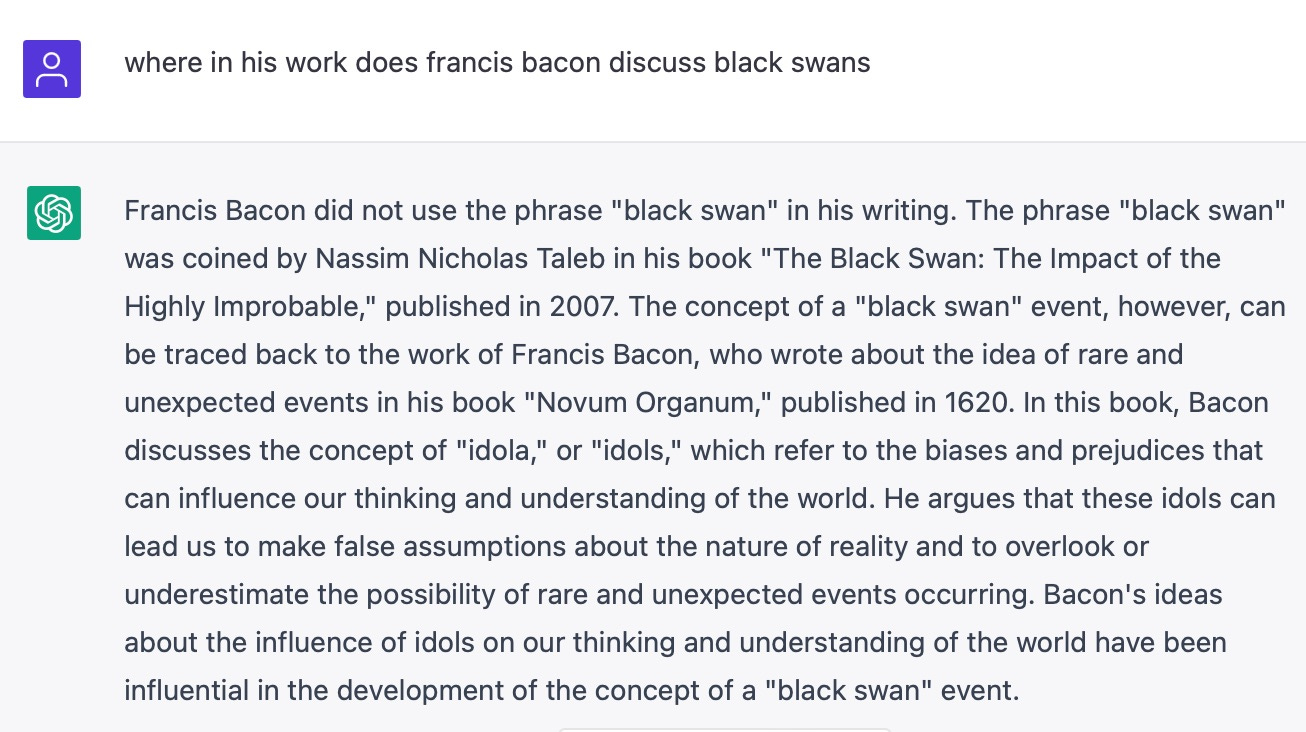

I remember reading about “black swans” in 1973 (p. 350 of Time Enough for Love <https://archive.org/details/timeenoughforlov0000hein_t4d7/page/350/mode/2up?q=swan>). The phrase was certainly not coined by N.N. Taleb. And I very much doubt that it was coined by Francis Bacon.

There is no intentionality here. We have a long, long way to go. Chat-GPT costs, I guess, $30 million to train and then $0.10 per 300-word answer—$1 for a full 3000-word chat. Unless there are clever software improvements I cannot envision, perhaps we need a 100-fold increase in deployed computrons for a truly satisfactory conversation: $100 per consultation. And I do not think that Moore’s Law is going to save us.
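The back-of-the-envelope arithmetic in that paragraph can be laid out as a short sketch. Every number is one of the rough guesses from the text, not a measured cost, and the function name `chat_cost` is made up for illustration. The sketch also assumes that the cost per answer scales linearly with the compute deployed, which the text implies but does not state.

```python
# Rough guesses taken from the paragraph above, not measured figures.
COST_PER_ANSWER_USD = 0.10   # guessed cost of one 300-word answer
WORDS_PER_ANSWER = 300
CHAT_WORDS = 3000            # a "full" chat
COMPUTE_MULTIPLIER = 100     # guessed increase in compute needed


def chat_cost(words, multiplier=1):
    """Cost in USD of a chat of `words` words.

    Assumes cost scales linearly with both length and compute
    (an assumption of this sketch, not a claim from the text).
    """
    answers = words / WORDS_PER_ANSWER
    return answers * COST_PER_ANSWER_USD * multiplier


current = chat_cost(CHAT_WORDS)
scaled = chat_cost(CHAT_WORDS, multiplier=COMPUTE_MULTIPLIER)
print(f"Full chat today:          ${current:.2f}")
print(f"Full chat at 100x compute: ${scaled:.2f}")
```

Under those guesses a full chat comes to about $1 today and about $100 per consultation at a hundredfold compute increase, which matches the figures in the paragraph.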

There is at least the possibility that here we have a task where using wetware is going to always be cheaper than using silicon hardware…

Isn't ChatGPT just another way to waste time on the Internet? I may be one of the few Americans who does not have either a Twitter or Facebook account and my only guilty pleasures are a handful of Substack writers. If the Substackers start to slack off in terms of content, it's easy to say adios and move on to something else.

There was a contest at Carnegie Mellon back in the 1980s to come up with the best meaningful use of “Colorless green ideas sleep furiously”. The winner was along the lines of:

"Through the icy winter, the seeds lie dormant. Before the frost sets in, eager gardeners peruse colorful catalogs promising the fertile glories of the spring. They plant with hope, and through the long winter those colorless green ideas sleep furiously."

It isn't about intention. It's about having a useful model. You can ask what country is south of Burundi and get a good answer without a model, but can you then ask what country is south of that or to its west? How about two countries south, or three? With a model, for example a mental or physical map of Africa, those answers are easy. Without a model, getting a good answer requires someone to have asked the question before and enough conversational ability to track the conversation and supply an antecedent for "that".
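A toy sketch may make the contrast concrete. The 3x3 map below is entirely made up (the region names A through I stand in for countries, and nothing here reflects real geography), and the helper names `lookup` and `neighbor` are hypothetical. The memorized table can only answer questions it has already seen, while the map answers chained and multi-step questions it has never been asked.

```python
# Hypothetical 3x3 map; names and layout are invented for illustration.
GRID = [
    ["A", "B", "C"],
    ["D", "E", "F"],
    ["G", "H", "I"],
]
POSITION = {name: (r, c)
            for r, row in enumerate(GRID)
            for c, name in enumerate(row)}
STEP = {"north": (-1, 0), "south": (1, 0), "west": (0, -1), "east": (0, 1)}

# "No model": only answers to questions someone has asked before.
SEEN = {("A", "south"): "D"}


def lookup(name, direction):
    """Return a memorized answer, or None if the question is new."""
    return SEEN.get((name, direction))


def neighbor(name, direction, steps=1):
    """Use the map to find the region `steps` cells away, or None if off the map."""
    r, c = POSITION[name]
    dr, dc = STEP[direction]
    r, c = r + dr * steps, c + dc * steps
    if 0 <= r < len(GRID) and 0 <= c < len(GRID[0]):
        return GRID[r][c]
    return None


print(lookup("A", "south"))                  # memorized question
print(lookup("D", "east"))                   # never asked before
print(neighbor(neighbor("A", "south"), "east"))  # "south of A, then east of that"
print(neighbor("A", "south", steps=2))       # two regions south
```

The follow-up questions cost nothing extra once the map exists. The lookup table would need a separate memorized entry for each of them.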

The lack of a world model is a major problem. One glaring issue with image generators, for example, is that they have no model of number. If you ask for three eagles, you will likely get three eagles, but if you ask for four or five, you have run into one of those primitive intelligences that cannot count. There's a similar problem with protein folding or reaction prediction. If the space has been densely explored and the question involves simple proximity, the system can give fairly good answers. It falls apart completely in parts unknown or when needing to make an actual inference.