Can We Provide Algorithmic Society with a More Human Face than Was True of Market, Bureaucratic, or Command-&-Control Society?

Proposals for tuning the latest—algorithmic—caterwauling accompaniment to the zombie dance of human society; as we enter the mode of production of the algorithm: of the General-Purpose Transformer, of Large Language Models, and of Advanced Machine Learning…

From Francis Spufford: Red Plenty:

Marx had drawn a nightmare picture of what happened to human life under capitalism, when everything was produced only in order to be exchanged; when true qualities and uses dropped away, and the human power of making and doing itself became only an object to be traded. Then the makers and the things made turned alike into commodities, and the motion of society turned into a kind of zombie dance, a grim cavorting whirl in which objects and people blurred together till the objects were half alive and the people were half dead.

Stock-market prices acted back upon the world as if they were independent powers, requiring factories to be opened or closed, real human beings to work or rest, hurry or dawdle; and they, having given the transfusion that made the stock prices come alive, felt their flesh go cold and impersonal on them, mere mechanisms for chunking out the man-hours. Living money and dying humans, metal as tender as skin and skin as hard as metal, taking hands, and dancing round, and round, and round, with no way ever of stopping; the quickened and the deadened, whirling on.

That was Marx’s description, anyway.

And what would be the alternative? The consciously arranged alternative? A dance of another nature, Emil presumed. A dance to the music of use, where every step fulfilled some real need, did some tangible good, and no matter how fast the dancers spun, they moved easily, because they moved to a human measure, intelligible to all, chosen by all…

Spufford, Francis. 2012. Red Plenty. Minneapolis, Minnesota: Graywolf Press. <https://archive.org/details/redplenty0000spuf>

I have not yet taken my shot at what the transformation from the global-value chain mode of production to the attention-algorithmic info-biotech mode of production will mean. At most, I have declared my intention to take my shot:

[1] Brad DeLong: The Music to the Zombie Dance of Human Society Changes Its Key: ‘What is my take on this—on how we are constrained, and thus alienated, by alien powers that we do not understand, cannot control, and that are cold and indifferent to us even though they are the products of our own minds and are, in fact, composed of our own individual human actions as they are patterned by our societal institutions and our cultural practices?…

By 750,000 years ago the… East African Plains Ape… had become a limited time-binding anthology intelligence…. What one person in the band… knew, everyone else could learn. Moreover, what one ancestor had… [of] member of a neighboring band knew… incorporated into the culture, the entire band could learn. And in addition, there was the division of labor…. We as an anthology intelligence were very smart and knowledgeable. We as individual East African Plains Apes were pretty dumb. We still are. Even today, with our brains of 1400 ml twice as large as those of homo erectus, we are lucky if we can remember in the morning where we had left our keys last night….

Time passed…. More-or-less in order, we developed the productive (and unproductive) anthology intelligence-intensification communication technologies of: Language. Writing. Printing. Mass media. Social media. Algorithmic feeds. And we developed the social-organization cultural technologies of: Dominance. Prestige. Reciprocal gift-exchange. Redistribution. Propaganda. Charisma. Honor. Market economy (which is something much more than reciprocity). Bureaucracy (which is something much more than redistribution). Algorithmic classification…

[2] Brad DeLong: Where Asymptote?: Trying to Think About GPT-LLM-ML: ‘Modern “AI” is, I now think, seven things. And calling it “AI” is a source of confusion. It is not “artificial intelligence”. It is Transformer models, large-language models, and machine-learning models. And the seven things are: 1. Auto-complete for everything on ‘roids. 2. The post-crypto boom way of separating gullible VCs from their money for the benefit of engineers, speculators, utopians, and grifters. 3. Very large-scale very high-dimension regression and classification analysis. 4. Natural-language interface to databases–so far, specifically, databases of coding examples. 5. The intersectional combination of (3) and (4) surveying the internet, in the form of ChatGPT, DALL-E, and so forth. 6. Highly verbal electronic-software pets, and coaches. 7. The latest of the so-many outbreaks of American religious cults that we have seen regularly since the First Great Awakening of Jonathan Edwards and George Whitefield in the 1730s.

Henry Farrell and Cosma Shalizi are, however, now taking their shot. And all I can carve out time to do is watch and try to follow them where they are going… “ROADS. Where we are going, we won’t need ROADS!…”

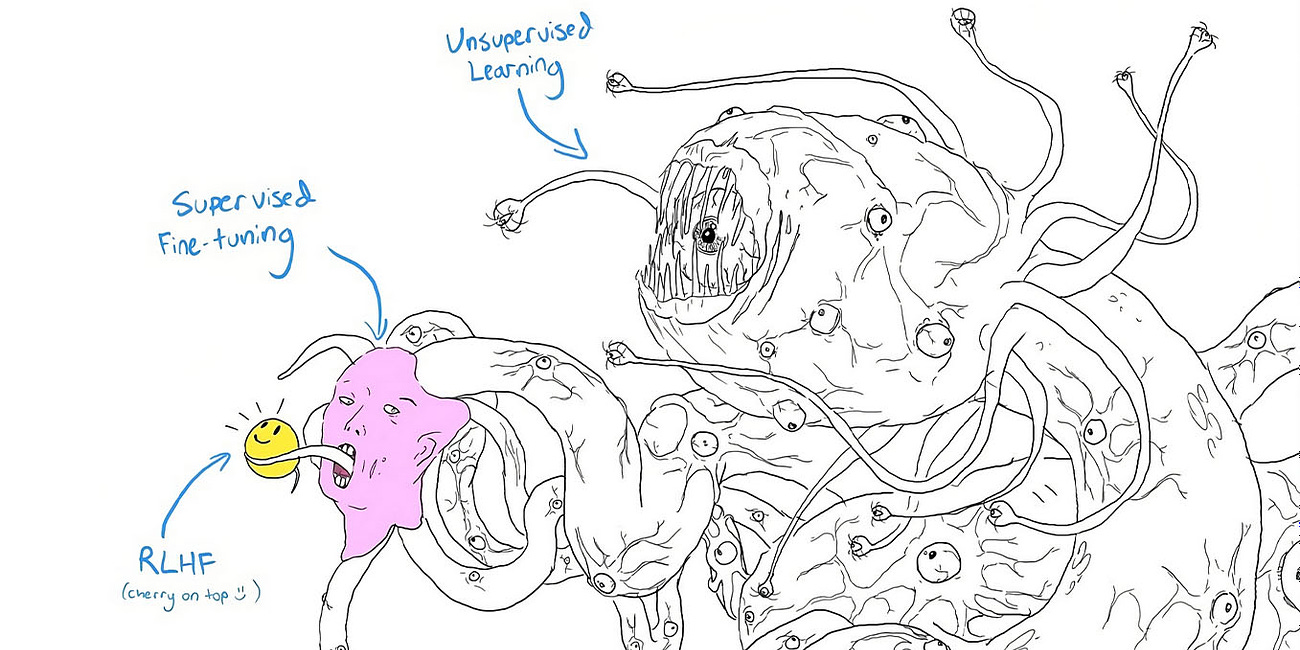

Henry Farrell & Cosma Shalizi: Artificial intelligence is a familiar-looking monster: ‘Large language models have much older cousins in markets and bureaucracies…. As the economist Friedrich Hayek argued, any complex economy has to somehow make use of a terrifyingly large body of disorganised and informal “tacit knowledge”…. No individual brain or government can possibly comprehend them…. But the price mechanism lets markets summarise this knowledge and make it actionable…. James Scott has explained how bureaucracies are monsters of information, devouring rich, informal bodies of tacitly held knowledge and excreting a thin slurry of abstract categories that rulers use to “see” the world. Democracies spin out their own abstractions. The “public” depicted by polls and election results is a drastically simplified sketch of the amorphous mass of opinions, beliefs and knowledge held by individual citizens…. Markets and states can have enormous collective benefits, but they surely seem inimical to individuals who lose their jobs to economic change or get entangled in the suckered coils of bureaucratic decisions. As Hayek proclaims, and as Scott deplores, these vast machineries are simply incapable of caring if they crush the powerless or devour the virtuous. Nor is their crushing weight distributed evenly… <https://www.economist.com/by-invitation/2023/06/21/artificial-intelligence-is-a-familiar-looking-monster-say-henry-farrell-and-cosma-shalizi>

Henry Farrell & Cosma Shalizi: Artificial intelligence is a familiar-looking monster: ‘The modern world has been built by and within monsters, which crush individuals without remorse or hesitation, settling their bulk heavily on some groups, and feather-light on others. We eke out freedom by setting one against another, deploying bureaucracy to limit market excesses, democracy to hold bureaucrats accountable, and markets and bureaucracies to limit democracy’s monstrous tendencies. How will the newest shoggoth change the balance, and which politics might best direct it to the good? We need to start finding out… <https://www.economist.com/by-invitation/2023/06/21/artificial-intelligence-is-a-familiar-looking-monster-say-henry-farrell-and-cosma-shalizi>

Henry Farrell: Shoggoths amongst us: ‘Highly personalized relationships allow you to understand the people who you have direct connections to, but they make it far more difficult to systematically gather and organize the general knowledge that you might want to carry out large scale tasks. It will in practice often be impossible effectively to convey collective needs through multiple different chains of personal connection, each tied to a different community with different ways of communicating and organizing knowledge. Things that we take for granted today were impossible in a surprisingly recent past, where you might not have been able to work together with someone who lived in a village twenty miles away. The story of modernity is the story of the development of social technologies that are alien to small scale community, but that can handle complexity far better… <https://crookedtimber.org/2023/07/03/shoggoths-amongst-us/>

Henry Farrell: AI’s Big Rift Is like a Religious Schism: ‘Two centuries ago Henri de Saint-Simon, a French utopian, proposed a new religion, worshipping the godlike force of progress, with Isaac Newton as its chief saint. He believed that humanity’s sole uniting interest, “the progress of the sciences”, should be directed by the “elect of humanity”, a 21-member “Council of Newton”. Friedrich Hayek, a 20th-century economist, later gleefully described how this ludicrous “religion of the engineers” collapsed into a welter of feuding sects. Today, the engineers of artificial intelligence (AI) are experiencing their own religious schism. One sect worships progress, canonising Hayek himself. The other is gripped by terror of godlike forces. Their battle has driven practical questions to the margins of debate… <https://www.economist.com/by-invitation/2023/12/12/ais-big-rift-is-like-a-religious-schism-says-henry-farrell>

Henry Farrell: AI’s Big Rift Is like a Religious Schism: ‘Mr Andreessen published his own… Nicene creed for the cult of progress: the words “we believe” appear no less than 113 times…. His list of the “patron saints” of techno-optimism begins with Based Beff Jezos, the social-media persona of a former Google engineer who claims to have founded “effective accelerationism”, a self-described “meta-religion” which puts its faith in the “technocapital Singularity”…. This schism is an attention-sucking black hole that makes its protagonists more likely to say and perhaps believe stupid things… <https://www.economist.com/by-invitation/2023/12/12/ais-big-rift-is-like-a-religious-schism-says-henry-farrell>

Henry Farrell: The political economy of Blurry JPEGs: ‘What LLMs can do. And what they can't…. LLMs are processors of human created intelligence, rather than putative intelligences in their own right. That brings… political economy… to the center of attention…. We should pay… more [attention] to how these technologies resemble (and differ from) other cultural technologies… substantial economic, social and political changes, with winners and losers, and struggles…. What you get from LLMs is a catalyzed compound of what human beings put in in the first place…. The Summarization Society. How do new technologies for summarizing textual information change what we can and cannot do. If Software Eats the World, What Comes Out the Other End? Generative AI, enshittification, and the scarcity value of high quality knowledge. Who Gets What from LLMs. Fights over copyright as a proxy for the division of spoils. If Markets Could Speak. Big collective systems and the pitfalls of human cognition. The Stories in the Lazaret. What LLMs’ output does, and does not, have in common with fiction. Vico’s Singularity. Vernor Vinge is a fun science-fiction writer—but a mediocre guide to the future of AI… <https://programmablemutter.com/p/the-political-economy-of-blurry-jpegs>

Cosma Shalizi: On Shoggothim: ‘An LLM is a way of taking the vast inchoate chaos of written-human-language-as-recorded-on-the-Web and simplifying and abstracting it in potentially useful ways. They are, as Alison Gopnik says, cultural technologies, more analogous to library catalogs than to individual minds. This makes LLMs recent and still-minor members of a larger and older family of monsters which similarly simplify, abstract, and repurpose human minds: the market system, the corporation, the state, even the democratic state. Those are distributed information-processing systems which don't just ingest the products of human intelligence, but actually run on human beings—a theme I have been sounding for a while now… <http://bactra.org/weblog/shoggothim.html>

Cosma Shalizi (2010): The Singularity in Our Past Light-Cone: ‘The Singularity… was over by the close of 1918. Exponential yet basically unpredictable growth of technology, rendering long-term extrapolation impossible (even when attempted by geniuses)? Check. Massive, profoundly dis-orienting transformation in the life of humanity, extending to our ecology, mentality and social organization? Check. Annihilation of the age-old constraints of space and time? Check. Embrace of the fusion of humanity and machines? Check. Creation of vast, inhuman distributed systems of information-processing, communication and control, "the coldest of all cold monsters"? Check; we call them "the self-regulating market system" and "modern bureaucracies" (public or private), and they treat men and women, even those whose minds and bodies instantiate them, like straw dogs… <http://bactra.org/weblog/699.html>

Cosma Shalizi (2012): In Soviet Union, Optimization Problem Solves You: ‘Attention conservation notice: Over 7800 words about optimal planning for a socialist economy and its intersection with computational complexity theory. This is about as relevant to the world around us as debating whether a devotee of the Olympian gods should approve of transgenic organisms. (Or: centaurs, yes or no?) Contains mathematical symbols but no actual math, and uses Red Plenty mostly as a launching point for a tangent… <http://bactra.org/weblog/918.html>

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘The way of thinking about LLMs that I think is most promising and attractive is due (so far as I know) to the cognitive scientist Alison Gopnik, which is to say that they are… information-retrieval…. LLM… like a library catalog. Prompting it with text is something like searching over a library's contents for passages that are close to the prompt, and sampling from what follows. "Something like" because of course it's generating new text from its model, not reproducing its data… <http://bactra.org/notebooks/nn-attention-and-transformers.html>

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘This… irritating and opaque…. But clearly I need to wrap my head around it, before I become technically obsolete. My scare quotes in the title of these notes thus derive in part from jealousy and fear. But only in part: the names here seem like proof positive that McDermott's critique of "wishful mnemonics" needs to be re-introduced into the basic curriculum of AI…. Suppose we have a big collection of inputs and outputs to some function… We now want to make a guess at the value of the function for a new input point… Introduce a kernel function K(u,v) which measures how similar u is to v; this function should be non-negative, and should be maximized when u=v. Now use those as weights [applied to your existing dataset]…. Nadaraya-Watson smoothing, a.k.a. kernel smoothing… <http://bactra.org/notebooks/nn-attention-and-transformers.html>
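
For readers who want to see the recipe in that passage run, here is a minimal sketch of kernel smoothing in plain Python. The Gaussian kernel, the `bandwidth` parameter, the function names, and the toy data are all my assumptions for illustration; the quote only requires that K(u,v) be non-negative and largest when u=v.

```python
import math

def gaussian_kernel(u, v, bandwidth=1.0):
    """Similarity of u and v: non-negative, and largest when u == v."""
    return math.exp(-((u - v) ** 2) / (2 * bandwidth ** 2))

def kernel_smooth(x_new, xs, ys, kernel=gaussian_kernel):
    """Nadaraya-Watson guess at f(x_new): a kernel-weighted average of the ys."""
    weights = [kernel(x_new, x) for x in xs]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, ys)) / total

# Toy dataset (made up): noiseless samples of y = x**2.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x ** 2 for x in xs]

# Guess the function at a point that is not in the dataset.
print(kernel_smooth(2.5, xs, ys))
```

Because the guess is a weighted average, it always lands between the smallest and largest observed outputs, and shrinking the bandwidth makes it lean ever harder on the nearest data points.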

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘What the neural network people branded "attention" sometime around 2015 was just re-inventing this; x(o) is their "query" vector, the x(i) are their "key" vectors, and the y(i) are their "value" vectors. "Self-attention" means that y(i) = r x(i), for another square matrix r, i.e., there's a linear-algebraic link between the input and output values. (Possibly r=I, the identity matrix.) Again: Calling this "attention" [is] at best a joke. Actual human attention is selective, but this gives some weight to every available vector x(i). What is attended to also depends on the current state of the organism, but here it's just about similarity between the new point x(o) and the available x(i)'s. (I say "human attention" but it seems very likely that this is true of other animals as well.) (So far as I can tell, the terms "key", "value", and "query" come here from thinking of this as a sort of continuous generalization of an associative array data type. Which you could...) <http://bactra.org/notebooks/nn-attention-and-transformers.html>
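
A rough sketch of that mapping, in plain Python: the query x(o) is compared with every key x(i), and the result is a weighted average of the values y(i). The exponential-of-scaled-dot-product kernel is my assumption, chosen because normalizing it gives the softmax weights of standard scaled dot-product attention; the function names and toy vectors are likewise hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def exp_dot_kernel(u, v):
    """Assumed kernel: exponential of the scaled dot product, always positive."""
    return math.exp(dot(u, v) / math.sqrt(len(u)))

def attend(query, keys, values, kernel=exp_dot_kernel):
    """Kernel-weighted average of the value vectors for one query."""
    weights = [kernel(query, k) for k in keys]
    total = sum(weights)
    weights = [w / total for w in weights]  # normalized exp(...) is a softmax
    dim = len(values[0])
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return out, weights

def self_attend(xs, r):
    """Self-attention in the quote's sense: the values are y(i) = r x(i)."""
    values = [[dot(row, x) for row in r] for x in xs]
    return [attend(x, xs, values)[0] for x in xs]

# Toy vectors (made up), with r = I, the identity matrix.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

out, weights = attend(xs[0], xs, xs)
print(weights)                    # every key gets some weight; none is exactly zero
print(self_attend(xs, identity))
```

The weights printed first make the quote's complaint concrete: nothing is ever ignored outright, and the weighting depends only on similarity between the query and the keys.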

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘I would have been very surprised if I was the first to realize that "attention" is a form of kernel smoothing…. Tsai et al. 2019…. I like to think I am not a stupid man, and I have been reading about, and coding up, neural networks since the early 1990s. But I read Vaswani et al. (2017) multiple times, carefully, and was quite unable to grasp what "attention" was supposed to be doing…. "It's Just Kernel Smoothing" vs. "You Can Do That with Just Kernel Smoothing!?!”[:] The fact that attention is a kind of kernel smoothing takes nothing away from the incredibly impressive engineering accomplishment of making the blessed thing work…. I realize that my irritation with the obscurities is a lot clearer in these notes than my admiration for the achievements; chalk that up to my flaws as a writer and as a human being… <http://bactra.org/notebooks/nn-attention-and-transformers.html>

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘"Transformers"…. Look back over the last umpteen thousand characters, and break those into little chunks called "tokens", so a context is say k tokens long…. Each token gets mapped to a unique vector in a not-too-low-dimensional space, 1≪d≪S… "embedding"… learnable parameters, fit by maximum likelihood…. The context is now a sequence of d-dimensional vectors…. Use "attention" to do kernel smoothing of these vectors… to get (say) y(t)…. Push… through a feed-forward neural network to get an S-dimensional vector of weights over token types…. Sample a token…. Forget the oldest token and add on the generated one as the newest part of the context…. We also use encoding of positions within the context…. People get better results if they do the kernel smoothing multiple times… with different w matrices, average the outputs… and then pass the result to the feed-forward neural network. (See above, "multi-headed attention".)… <http://bactra.org/notebooks/nn-attention-and-transformers.html>

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘It's this unit: Read in length-k sequence of d-dimensional input vectors; Do "attentional" (kernel) smoothing; Pass through a narrow, shallow feed-forward network; Spit out a length-k sequence d-dimensional output vector… that constitutes a “transformer”. We pile transformers on transformers until the budget runs out, and at the end we push through one final neural network to get weights over tokens (token types, dammit) and sample from them… <http://bactra.org/notebooks/nn-attention-and-transformers.html>
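
To make the unit and the generation loop concrete, here is a toy sketch in plain Python. Everything specific in it is my assumption: the tiny sizes `S`, `D`, `K`, and `LAYERS`, the random untrained weights, the ReLU feed-forward layer, and reading the next-token weights off the last position. It also leaves out positional encodings, multi-headed attention, and the learnable matrices inside the kernel, so it shows only the shape of the computation, not a working language model.

```python
import math
import random

random.seed(0)
S, D, K, LAYERS = 5, 4, 3, 2  # token types, embedding dim, context length, depth

def rand_matrix(rows, cols):
    # Untrained stand-in for parameters that would be fit by maximum likelihood.
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def softmax(zs):
    top = max(zs)
    exps = [math.exp(z - top) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def smooth(vectors):
    """'Attentional' (kernel) smoothing: each output averages all the inputs."""
    out = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D) for k in vectors]
        w = softmax(scores)
        out.append([sum(wi * v[j] for wi, v in zip(w, vectors)) for j in range(D)])
    return out

def feed_forward(v, w_in, w_out):
    hidden = [max(0.0, h) for h in matvec(w_in, v)]  # one narrow ReLU layer
    return matvec(w_out, hidden)

embedding = rand_matrix(S, D)  # one d-dimensional vector per token type
layers = [(rand_matrix(D, D), rand_matrix(D, D)) for _ in range(LAYERS)]
readout = rand_matrix(S, D)    # final network: d dimensions to S weights

def next_token_weights(context):
    vectors = [embedding[t] for t in context]
    for w_in, w_out in layers:  # pile units on units
        vectors = [feed_forward(v, w_in, w_out) for v in smooth(vectors)]
    return softmax(matvec(readout, vectors[-1]))

def generate(context, steps):
    context, produced = list(context), []
    for _ in range(steps):
        probs = next_token_weights(context)
        token = random.choices(range(S), weights=probs)[0]  # sample a token
        produced.append(token)
        context = context[1:] + [token]  # forget the oldest, append the newest
    return produced

print(generate([0, 1, 2], steps=6))
```

With random weights the output is noise; the point is only that each step is smoothing, a small feed-forward pass, a readout to weights over token types, and a draw from those weights.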

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘O You Who Believe in the Resurrection and the Last Day…. Because life imitates the kind of schlocky fiction I adoringly consume, the public discussion of these models is polluted by maniacal cultists with obscure ties to decadent plutocrats… people who think they can go from the definition of conditional probability, via Harry Potter fanfic, to prophesying that an AI god will judge the quick and the dead, and condemn those who hindered the coming of the Last Day to the everlasting simulated-but-still-painful fire. ("Impressive act! What do you call yourselves?" "The Rationalists!")… <http://bactra.org/notebooks/nn-attention-and-transformers.html>

Cosma Shalizi: “Attention", "Transformers", in Neural Network "Large Language Models": ‘Whether such myths would be as appealing in a civilization which hadn't spent 2000+ years marinating in millenarian hopes and apocalyptic fears, or whether on the contrary such hopes and fears have persisted and spread over the globe through twenty-plus centuries of transformation because they speak to something enduring in humanity, is a delicate question. But I submit it's obvious we are just seeing yet another millenarian movement. (Sydney/Bing is no more the Beast, or even the Whore of Babylon, than was Eliza.) This isn't to deny that there are serious ethical and political issues about automated decision-making (because there are)…. (Should the interface to the library of human knowledge be… noisy sampler of the Web, tweaked to not offend the sensibilities of computer scientists and/or investors in California?)… [But I do] deny that engaging with the cult is worthwhile… <http://bactra.org/notebooks/nn-attention-and-transformers.html>

Here is the question of the day: who makes more money, a TikTok influencer or an algorithm developer? My money is on the former.