Spreadsheet, Not Skynet: Microdoses, Not Microprocessors

The modest power relative to the size of the economy of GPT LLM MAMLMs as linguistic artifacts. Of course, since the economy is super huge, a relatively modest effect on it is still huge one. But...

The modest power relative to the size of the economy of GPT LLM MAMLMs as linguistic artifacts. Of course, since the economy is super huge, a relatively modest effect on it is still huge one. But more an Excel-class innovation than an existential threat to humanity. Or am I wrong?

I am pretty confident that “AI” will, in the short- and medium-run, have a minimal impact on measured GDP and a positive but limited impact on human welfare (provided we master our attention uses of ChatGPT and company rather than finding others who wish us ill using them to enslave our attention). I just said so, earlier today: J. Bradford DeLong: MAMLM as a General Purpose Technology: The Ghost in the GDP Machine <https://braddelong.substack.com/p/mamlm-as-a-general-purpose-technology>:

But in my meatspace circles here in Berkeley and in the cyberspace circles I frequent, I find myself distinctly in the minority. Here, for example, we have a very interesting but I must regard as weird piece from the smart and thoughtful Ben Thompson:

Ben Thompson: Checking In on AI and the Big Five <https://stratechery.com/2025/checking-in-on-ai-and-the-big-five/>: ‘There is a case to be made that Meta is simply wasting money on AI: the company doesn’t have a hyperscaler business, and benefits from AI all the same. Lots of ChatGPT-generated Studio Ghibli pictures, for example, were posted on Meta properties, to Meta’s benefit. The problem… is that the question of LLM-based AI’s ultimate capabilities is still subject to such fierce debate. Zuckerberg needs to hold out the promise of superIntelligence not only to attract talent, but because if such a goal is attainable then whoever can build it won’t want to share; if it turns out that LLM-based AIs are more along the lines of the microprocessor—essential empowering technology, but not a self-contained destroyer of worlds—then that would both be better for Meta’s business and also mean that they wouldn’t need to invest in building their own…

For the sharp Ben Thompson, he has inhaled so much of the bong fumes that for him the idea that the impacts of LLM-based AIs… along the lines of the microprocessor is the low-impact case. The high-impact case is that they will become superintelligent destroyers of worlds.

This seems to me crazy.

Most important, I have seen nothing from LLM-based models so far that would lead me to classify the portals to structured and unstructured datastores they provide as more impactful than the spreadsheet, but for natural-language interface rather than for structured calculation. And I see nothing to indicate that they are or will become complex enough to be more than that. Back in the day, you fed Google keywords, and it told you what the typical internet s***poster writing about the keywords had said. Poke and tweak Google, and it might show you what an informed analyst had said. That is what ChatGPT and its cousins do, but using natural language, and thus meshing with all your mental affordances in a way that is overwhelmingly useful (because it makes it so easy and frictionless) and overwhelmingly dangerous (because it has been tuned to be so persuasive).

(Parenthetically, Thompson’s conclusion that in the low-impact case Facebook “wouldn’t need to invest in building their own” models is simply wrong. Modern advanced machine-learning models—MAMLMs—are much more than just GPT LLM ChatBots. The general category is very big-data, very high-dimension, very flexible-function classification, estimation, and prediction analysis. 80% of the current anthology-intelligence hivemind share right now is about ChatBots, and only 20% about other stuff. But the other stuff is very important to FaceBook: Very big-data, very high-dimension, very flexible-function classification, estimation, and prediction analysis is key for ad-targeting. And FaceBook needs to keep Llama competitive and give it away for free to cap the profits a small-numbers cartel of foundation-model providers can transfer from FaceBook’s to their pockets.)

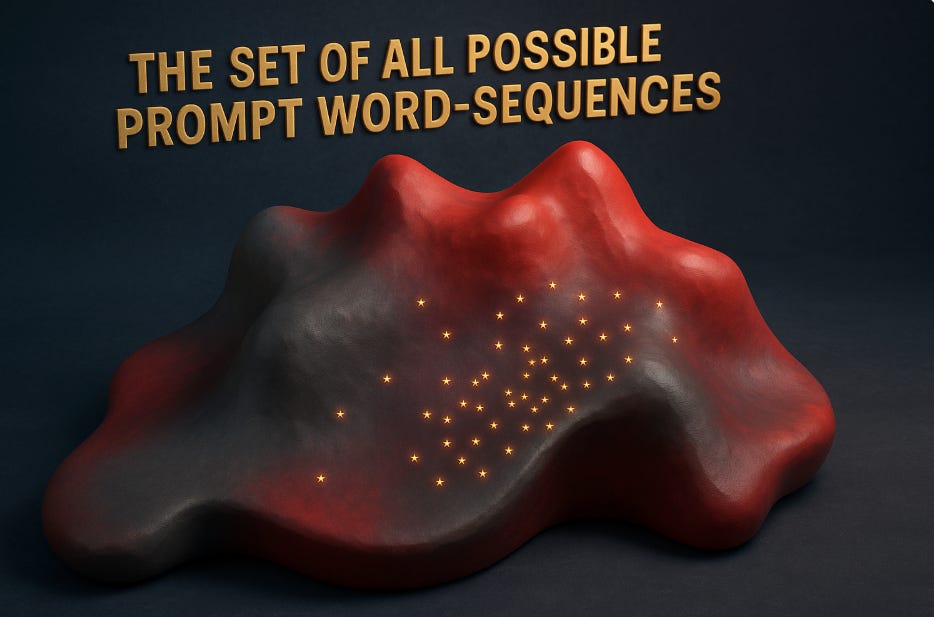

Now I see the GPT LLM category of MAMLMs as limited to roughly spreadsheet—far below microprocessor—levels of impact because I see them as what I was taught in third-grade math to call “function machines”: you give them something as input, and they burble away, and then some output emerges. start with the set of all word-sequences—well, token sequences—that can be said or written.

There is a subset of that that is our training data. Our training data consists of some of those word-sequences, and then the next word that the speaker or writer sets down.

We can visualize it sort of like this:

with the reddish blob being the set of all word-sequences, and the points of light being lur training data

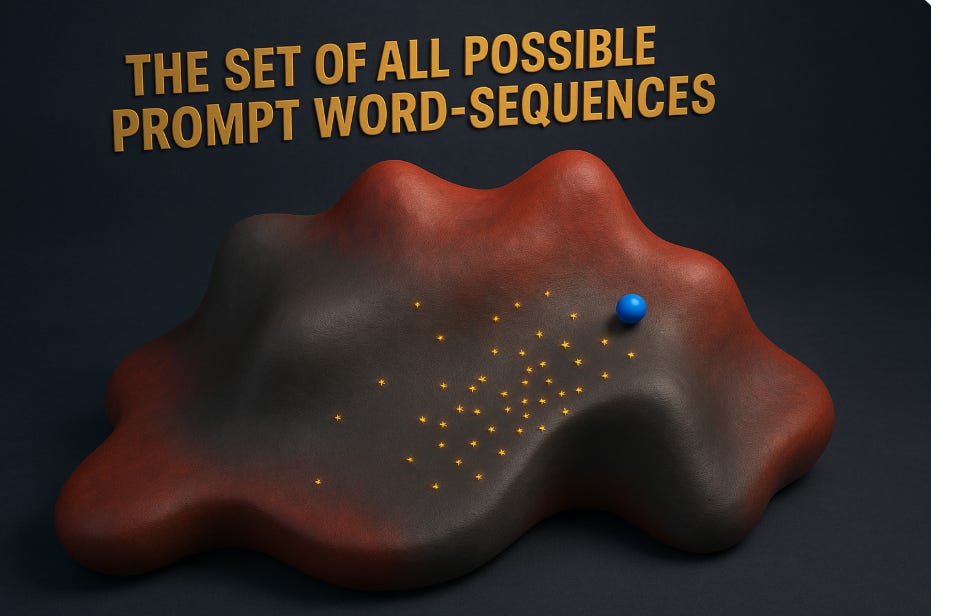

But now we get a prompt for the LLM. And almost all the time the exact prompt is not in the training data. The number of coherent fifteen-word English sentences is more than a thousand billion billion. And the combinatorics explode from there with longer context lengths.

The LLM needs to come up with a next word for this prompt:

So it cannot simply look in its training data and say: that is the next word.

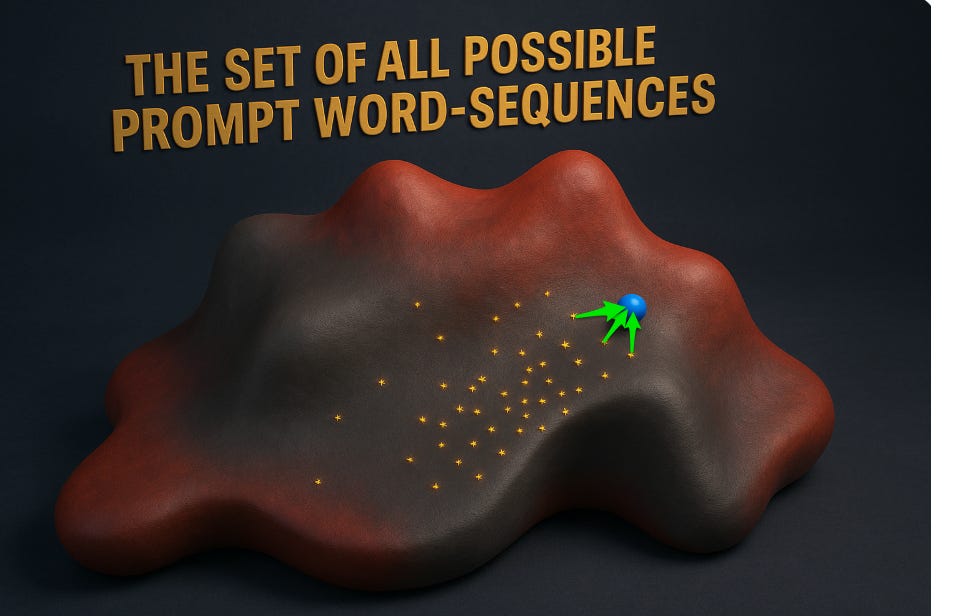

So what does it do instead?

Well, what can it do? What it can do is look at the word-sequences in its training data that are “close” to the word-sequence that is the prompt. And then it can choose a next word (or, rather, can choose a probability distribution from which, after consulting a random number generator, it picks the next word) that is some kind of “average” of the next words from the training-data word-sequences “close” to the word-sequence that is the prompt. It “interpolates” from the training data:

Or, rather, because it is much too small to actually store the training data, from a lossy regularization, smoothing, and compression of the training data. (See Chiang (2023).)

As Shalizi (2023, 2025) puts it:

Cosma Shalizi: ‘Attention’, ‘Transformers’, in Neural Network ‘Large Language Models’ <http://bactra.org/notebooks/nn-attention-and-transformers.html>: ‘What contexts in the training data looked similar to the present context? What was the distribution of the next word in those contexts?… We can hope that similar contexts will result in similar next-word distributions…. Smoothing… will add bias to our estimate of the distribution, but reduce variance, and we can come out ahead if we do it right…. Sample from that distribution…. Drop the oldest word from the remote end of context and add the generated word to the newest end…

Then repeat.

It is in this sense that it is “autocomplete on steroids”. (It is still the case that the best comprehensible introduction to this (for me at least) that I have been pointed to is still Alessandrini & al. (2023).)

Note that from this perspective all the talk of “neural networks”, “electric brains”, “AGI—artificial general intelligence”, and so forth is (largely) a confusing distraction. Google Search with its pagerank was (initially) giving you its estimate of which webpage a typical internet user thinking about these keywords would link to. It was not “AGI”, or even the path to “AGI”. A MAMLM GPT LLM is giving you its estimate of what the typical internet s***poster who had written this particular word-sequence would write next.

Of course, it is much more than that.

For one thing, there are sets of webpages on the internet that are very "close" together and relatively "dense" and in which the view of the typical Internet s***poster is actually quite smart. Thus the machine can make and provide you with a very good estimate of what a reliable authority would write next. And it can be guided into states in which it relies upon sections of the training data that are in fact reliable, and provide substantial insight:

Programming is the canonical case here: very few people have put faulty programs up on the internet as a joke to mislead people.

The idea of “prompt engineering” is the attempt to discover rules-of-thumb about word-sequences you can add to your prompt that will guide the GPT LLM to an answer where the training data was written by smart, informed people saying true and useful things.

The idea of RAG—retrieval-augmented generation—is that an initial keyword search of a restricted set of documents will do much of your prompt engineering for you.

The idea of RLHF—reinforcement learning with human feedback—is that a second layer of human judgment can be applied to to the metric metric by which the GPT LLM judges that word-sequences are “close”, making word-sequences in the training data that were followed by words that are wrong or confusing for our purposes are classified as far away from everything.

For another thing, the results are very, very impressive indeed across a number of dimensions. And every time I read this point, I am again unable to avoid quoting Shalizi (2023, 2025):

Cosma Shalizi: ‘Attention’, ‘Transformers’, in Neural Network ‘Large Language Models’ <http://bactra.org/notebooks/nn-attention-and-transformers.html>: ‘"It's Just Kernel Smoothing" vs. "You Can Do That with Just Kernel Smoothing!?!": [That] takes nothing away from the incredibly impressive engineering accomplishment of making the blessed thing work…. nobody [before] achieved anything like the[se] feats…. [We] put effort into understanding… precisely because the results are impressive!…

The neural network architecture here is doing some sort of complicated implicit smoothing across contexts… [that] has evolved (under the selection pressures of benchmark data sets and beating the previous state-of-the-art) to work well for text as currently found online.… Markov models for language are really old…. Nobody, so far as I know, has achieved results anywhere close to what contemporary LLMs can do. This is impressive...

How can they be so impressive?

This is a problem for me. Because they are doing things that my intuition strongly leads me to think that they should not be able to do half as well as they do.

Now don’t get me wrong. They are good summarization engines. And they have the extraordinary advantage that natural-language interfaces match my linguistic-human affordances perfectly. Still, they cannot reliably pull out author-date references from an article and construct a complete, correct reference list. By now we know, broadly, from experience, what these thing are useful for, and where they fall down. These things can be immensely useful for:

Summarization engines: Summarizing articles and literatures, plus generating code snippets where there are a lot of right answers to nearly equivalent questions on the ‘net.

Boilerplate tasks: Other tasks in which the formulae are nearly fixed in desired result or in which the desired compression is close to something like take-every-paragraphs-most-important-sentence.

Ritual tasks: where what is wanted is not the conveying of new and novel insights, but rather confirmation that some particular milestone has been passed, that some particular checklist has been applied.

Language translation tasks: mapping common patterns across languages.

Idea generation tasks: brainstorming, outlining, or riffing on familiar genres—producing a great many variants of things that have been said in roughly similar contexts so that the human can then judge which are most appropriate in this particular case, and be nudged themself in the direction of generating still better options.

Remember:

MAMLM GPT LLMs have not evolved for accuracy.

Rather, they have been evolved for plausibility to human readers.

MAMLM GPT LLMs are best at generating mass-production equivalent text where there are a lot of closely related examples on the internet.

There is now a word for this: AI-slop.

And always, always, always: check their work. If you cannot immediately tell whether they are hallucinating or not, you have no business using them for the tasks you are attempting to do.

References:

Alessandrini, Giulio, Brad Klee, & Stephen Wolfram. 2023. "What Is ChatGPT Doing ... and Why Does It Work?". <https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/>.

Chiang, Ted. 2023. “ChatGPT Is a Blurry JPEG of the Web.” The New Yorker, February 9. <https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web>.

DeLong, J. Bradford. 2025. “MAMLM as a General Purpose Technology: The Ghost in the GDP Machine”. Grasping Reality, June 24. <https://braddelong.substack.com/p/mamlm-as-a-general-purpose-technology>.

Shalizi, Cosma. 2025. “On Feral Library Card Catalogs, or, Aware of All Internet Traditions.” Programmable Mutter, April 17. <https://www.programmablemutter.com/p/on-feral-library-card-catalogs-or>.

Shalizi, Cosma. 2023, 2025. “‘Attention’, ‘Transformers’, in Neural Network ‘Large Language Models’. Notebooks, April 24. <http://bactra.org/notebooks/nn-attention-and-transformers.html>.

If reading this gets you Value Above Replacement, then become a free subscriber to this newsletter. And forward it! And if your VAR from this newsletter is in the three digits or more each year, please become a paid subscriber! I am trying to make you readers—and myself—smarter. Please tell me if I succeed, or how I fail…

#spreadsheet-not-skynet

#subturingbradbot

#dia-browser-testbed

What Brad/Cosma's explanation of LLM misses (along with most others) -- the reason why Brad's intuition "strongly leads [him] to think that they should not be able to do half as well as they do" -- is the concept of **faithful representation**. Put simply: Reality allows itself to be mapped in a low-dimensional latent space, and some compression schemes are just **good mappings**. [Or at least (the instrumentalist view), of humanity's currently-known shared ways to describe and predict our observations of reality.]

This is why our scientific and statistical models work in the first place. Brute-force ML works because (or to the extent that) it finds these mappings. LLMs work because their training process finds the same latent representations as (or equivalent to) the representations that internet sh*tposters have in their heads as they're writing [1]. Therefore, they can quite accurately predict what a sh*tposter would have said, in pretty much any context.

Yes, this is just Plato's concept of "carving nature at its joints" from ~2400 years ago. Quite surprisingly, it turns out that we're all living at the unique moment in humanity's history where we are discovering that such a carving is an objective possibility, and that we can, for the first time, automate this carving [2, 3]. And this does not just apply to language, BTW: if the success of multimodal models weren't enough, tabular foundation models [4] demonstrate that good old statistics has the same property.

There is still the crucial question of whether/when, beyond "just" the latent representations, ML can learn the true causal world model: which latent states at t cause which latent states at t' > t. This is, in a technical sense, much harder than learning temporal correlation, and at least in some cases, it's **impossible** to learn just from the data, requiring the capability to intervene/experiment [5]. However, it has recently been proven that learning a good causal model is a strong requirement for robust behavior -- ie, reliable extrapolation beyond the data [6].

We've been lucky so far that the Internet already has a lot of natural-language descriptions of causal models of just about everything. These can be compressed into "meta-representations" that let LLMs "interpolate extrapolations". This is similar at some level to how humans learn much of their own extrapolation capability -- not by experimenting themselves, but by learning theory from other people and representing it in their heads as "little stories" that they can interpolate.

However, because these meta-representations can't be directly grounded in observational data, the way LLMs learn them is very sensitive to the quality of the training corpus. That is why stuff like embodied learning and synthetic data are hot topics in AI: This is stuff you want to get *exactly right*.

Regardless, "just" getting the representation right is a huge step in the direction, and it goes a long way to explain/justify the conceptual leap from "just statistics on steroids" to "AGI" made by LLM stans.

[1] https://arxiv.org/abs/2405.07987

[2] https://arxiv.org/abs/2505.12540v2

[3] https://aiprospects.substack.com/p/llms-and-beyond-all-roads-lead-to

[4] https://www.nature.com/articles/s41586-024-08328-6

[5] https://smithamilli.com/blog/causal-ladder/

[6] https://arxiv.org/abs/2402.10877

A couple of weeks ago I was opening up a stub of a paper I'm working on, a PDF of not fully 8 pages. Acrobat informed me that, "This looks like a long article. Would you like me to summarize it?"

I was appalled that it treated an 8-page piece of writing as "long," but curious what it would come up with for a summary.

Mostly good, but also got one key idea wrong.

If I didn't know the writing (having, after all, written it), I wouldn't have known how much of the summary was on-target and how much was eroneous. Simply knowing that some of the info is incorrect doesn't help - to figure out which is the incorrect stuff, you have to go in and read the article. And then the point of the summary was...?

I suppose one could say that the standard shouldn't be perfection, but rather, is the AI doing better than a graduate research assistant. My college is undergrads only, so I don't have any experience with evaluating the work of a graudate research assistant.