Current "AI" Is Three Things: Less Inhuman & Less Stupid ChatBots, High-Dimensional Nonlinear Regression & Classification, & a Way to Separate Gullible VCs from Money; & BRIEFLY NOTED: For 2023-02-11

“A Young Lady’s Illustrated Primer” Continues to Recede into the Future…

In my experience, “AI” today is three things:

less inhuman & less stupid chatbots,

high-dimensional nonlinear regression & classification, &

a way to separate gullible VCs from money.

But, right now, Microsoft appears to have a very different judgment—and Google is panicking. Or is it just that Microsoft thinks, perhaps rightly, that better chatbots are enough to commoditize everything Google does that is profitable?

But a sub-Turing instantiation of a human or a human-like mind? Not yet. Not yet at all, as best as I can tell.

Of the four hours of my Friday workday that I did not spend in meetings, I spent one hour doing extra stretching, to try to keep the sciatica merely handicapping and pain-inducing (rather than disabling and agonizing); one hour actually doing my job and revising syllabi; and two hours beating Python with the sand-filled rubber hose to induce it to run BradBot: the Brad DeLong ChatBot fueled by my weblog archives and powered by OpenAI's davinci GPT.

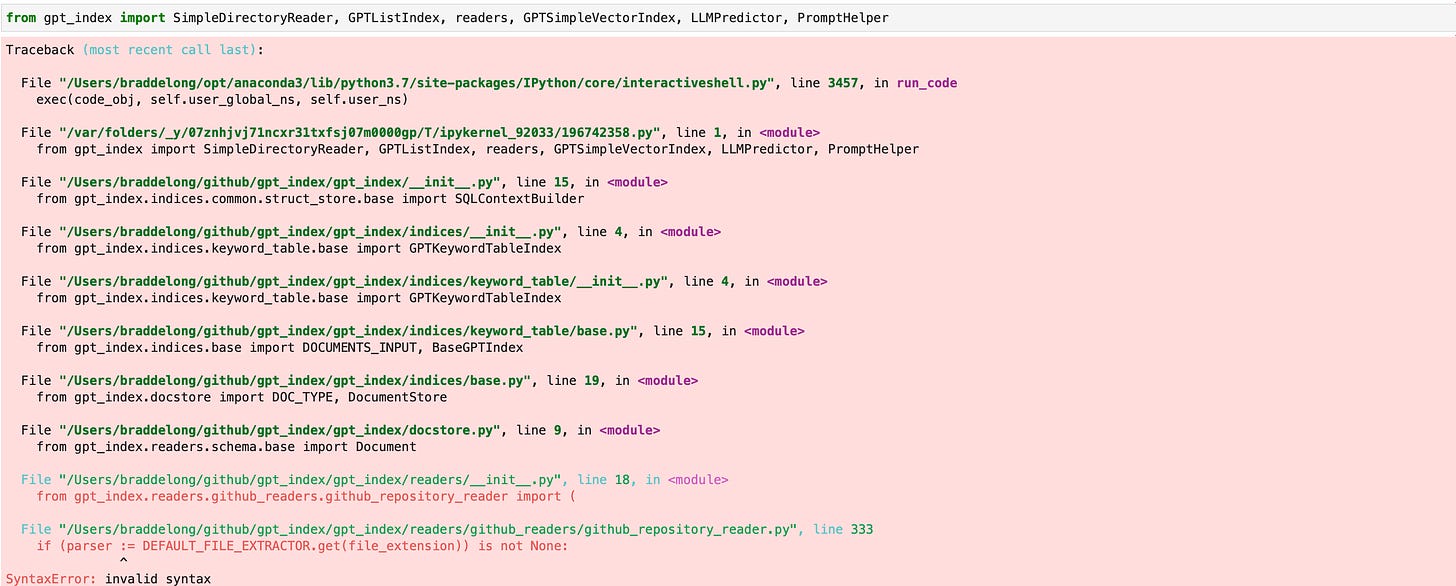

The **exact same** cut-and-pasted Python code runs fine on Google CoLab, but on my machine it barfs up:

Go figure.

"Restless sleeping balrogs deep in the Python kernel" seems as good an explanation as any.

But I did get it running, following the recipe by Dan Shipper of Every and using gpt_index by Jerry Liu.

However, it started hallucinating on the first question I asked it:

Brad: What is the hexapodia podcast?

BradBot: The hexapodia podcast is a podcast hosted by DeLong ChatBot that focuses on topics related to robotics, artificial intelligence, and other cutting-edge technologies.

As I vaguely and probably inaccurately understand what these models do, they have:

layers that turn, by some fitted strongly nonlinear process, the input word-token sequence into a vector in a very high-dimensional vector space

layers that essentially say "what pattern of words is, in my training data set, likely to follow what I have identified as the input vector?"
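To make that two-stage picture concrete, here is a deliberately tiny sketch in Python. Everything in it is an assumption for illustration: the six-word vocabulary, the eight dimensions, and above all the random, untrained weights. A real model fits its weights to an enormous body of text and keeps track of word order, which this toy does not.

```python
import math
import random

VOCAB = ["what", "is", "the", "hexapodia", "podcast", "?"]
DIM = 8  # toy size; real models use thousands of dimensions

rng = random.Random(0)
# Hypothetical, untrained weights. A real model fits these to its training text.
EMBED = {w: [rng.gauss(0, 1) for _ in range(DIM)] for w in VOCAB}
UNEMBED = {w: [rng.gauss(0, 1) for _ in range(DIM)] for w in VOCAB}


def encode(tokens):
    """Stage 1: turn the token sequence into one vector via a nonlinear map.

    Summing the embeddings ignores word order; that is a simplification.
    """
    summed = [sum(EMBED[t][i] for t in tokens) for i in range(DIM)]
    return [math.tanh(x) for x in summed]


def next_token_probs(context_vector):
    """Stage 2: score every vocabulary word against the vector, then softmax."""
    scores = {
        w: sum(a * b for a, b in zip(context_vector, UNEMBED[w])) for w in VOCAB
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


context = encode(["what", "is", "the", "hexapodia"])
probs = next_token_probs(context)
```

The point of the toy is only the shape of the computation: whatever vector the prompt lands on determines the whole distribution over what comes next, so one rare word can drag the answer into an odd neighborhood.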

If so, then my inclusion of the word "hexapodia" in the initial prompt threw BradBot a lethal curveball. It led BradBot off into a very weird corner of the space of language instances, a corner centered, of course, around Vernor Vinge's A Fire Upon the Deep, the overwhelming source in the training dataset of text containing “hexapodia”.

You know, I am kinda surprised that it did not reply: the hexapodia podcast is hosted by the Blight, a malevolent superintelligence located in the near Transcend...

That being said, the current versions of the tools for quick-and-dirty ChatBot creation are not up to the degree of snuff that I think I need for them to be useful. I need to be able to query my weblog archives, and have the ChatBot (the BradBot) tell me what conclusions I reached decades ago about some issue and what I need to read to quickly bring myself back to my long-ago peak of smartness about it, and then tell me what related things I have written that are likely to be relevant to my current concerns.

The problem is that the gpt-index road that people are using will (a) search my weblog archives for things in the prompt, and then (b) feed the prompt augmented by hit passages from my weblog archives to GPT. Thus what I will get back is some version of the typical human reaction in the training dataset to the word-token sequences in my weblog archives identified as relevant. And that—even without the hallucination problem—is not good enough. It seems to be not “past me speaking with wisdom”, but rather “conventional wisdom trying to summarize and react to something past me once wrote that might be relevant”.
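Steps (a) and (b) can be sketched in a few lines of Python. This is not the gpt_index code: the archive passages are invented placeholders, the keyword-overlap search is a crude stand-in I am assuming in place of whatever retrieval the library actually does, and the final call to GPT is left out entirely.

```python
import re

# Hypothetical placeholder passages, not real weblog text.
ARCHIVE = [
    "Hexapodia is the name of a podcast discussed on this weblog.",
    "Notes on central bank independence and inflation targets.",
    "Stretching routines for a long workday.",
]


def words(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, passages, k=1):
    """Step (a): rank passages by how many words they share with the question."""
    q = words(question)
    ranked = sorted(passages, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Step (b): prepend the hit passages to the question before sending to GPT."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


question = "What is the hexapodia podcast?"
hits = retrieve(question, ARCHIVE)
prompt = build_prompt(question, hits)
# The augmented prompt would then go to the language model (call not shown).
```

Seen this way, the archive contributes only raw material pasted into the prompt. The voice that answers is still the general-purpose model reacting to that material.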

If I had a time machine, I would go back 25 years and start including a question-and-answer catechism section at the end of every weblog post…

Dan Shipper: How to Build a Chatbot with GPT-3…

Jerry Liu: Welcome to GPT Index!…

Andrej Karpathy: Let's build GPT: from scratch, in code, spelled out…

Neal Stephenson (1995): The Diamond Age (New York: Bantam Books) <https://archive.org/details/diamondage0000step_w0c7>…

Vernor Vinge (1992): A Fire Upon the Deep (New York: Tor Books) <https://archive.org/details/fireupondeep0000ving>…

ONE VIDEO: Andrej Karpathy: Let's Build GPT: From Scratch, in Code, Spelled Out:

MUST-READ: I Assure You: Þe Videos of Me & My Wife in the Moscow Ritz-Carlton Are Very Boring:

Charles Leerhsen: Whatever Happened to Jeffrey Sachs?: ‘As the chair of... The Lancet’s COVID-19 Commission, Sachs... ventured into the area of the virus’s origins. Most physicians and immunologists have maintained from the start that, while nobody knows for certain how the bat-borne virus first entered the wider world, the most likely scenario by far was that it came from a “wet market” in Wuhan, China. Sachs’s Lancet report acknowledged the need for further study but seemed to lean toward the possibility that the coronavirus originated in a Chinese-government laboratory.... Many... are inclined to think the Wuhan connection is just too much of a coincidence to summarily dismiss, and that the coronavirus might have accidentally hitched a ride out on somebody’s skin, hair, or clothing. But Sachs couldn’t leave it at that.... Instead, he started what sounded like a one-man game of telephone, changing his story each time he repeated it, going quickly from “It was possible” to “It was odds-on” there had been a lab leak—and that Dr. Anthony Fauci, the former chief medical adviser to the president, and many other scientists were lying about it. And why were they lying? Because, Sachs said, over howls of protest from the alleged fabricators, they had been involved in secret “gain-of-function” research meant to strengthen the virus so it could be better used in bio-defense....

An A.I. chatbot could not engineer more obnoxious-sounding beliefs than Sachs has expressed on the Ukraine conflict, even as Russian missiles slam into apartment houses in Kharkiv—namely that “the West” is responsible for provoking Russia’s violent invasion (just as it was in the now largely forgotten Ukraine offensive of 2014) and that President Volodymyr Zelensky’s proposed peace terms are “nonsense.” As one of his academic peers told me, “I used to respect Sachs even if I disagreed with him, but now it’s just weird to watch him justify naked aggression”...

ONE IMAGE: Earthquake Aftermath in Turkey:

Very Briefly Noted:

Mohamed El-Erian: There Is More Inflation Complexity Ahead: ‘Three possibilities stand out… “immaculate disinflation… inflation… sticky… at 3-4%… [or] prices head back up… [with] a fully-recovered Chinese economy and the strong US labor market…. I would put the probability of this outcome at 25%…

Michal Kosinski: Theory of Mind May Have Spontaneously Emerged in Large Language Models: ‘Models published before 2022 show virtually no ability to solve ToM tasks. Yet, the January 2022 version of GPT-3 (davinci-002) solved 70% of ToM tasks, a performance comparable with that of seven-year-old children. Moreover, its November 2022 version (davinci-003), solved 93% of ToM tasks, a performance comparable with that of nine-year-old children...

Charlie Warzel: Is this the week AI changed everything?: ‘Clippy, it appears, has touched the face of God...

¶s:

Vasso Ioannidou, Sotirios Kokas, Thomas Lambert, & Alexander Michaelides: Governor appointments and central bank independence: ‘The negative relation between inflation and de jure central bank independence breaks down when we extend the sample to more recent years or/and a larger set of countries, [but] the negative relation between inflation and central bank independence sustains if we use de facto independence, as measured by our governor appointment index…. The mean deviation between inflation and the inflation target has a zero correlation with de jure central bank independence but an economically significant negative correlation with de facto central bank independence...

Jacky Liang: The Impact and Future of ChatGPT: ‘ChatGPT and more capable AI code assistance will force a fundamental reformulation of the abstraction levels that software engineers operate on. Most software engineers do not need to reason about low-level machine code, because we have very powerful compilers…. It's likely that coding AIs will act as the new “compilers” that translate high-level human instructions into low-level code, but at a higher abstraction level. Future software engineers may write high-level documentation, requirements, and pseudocode, and they will ask AI coders to write the middle-level code that people write today…. I don’t see software engineers getting replaced by AI as much as being pushed up the value chain…. The second-order effects… 1) unlocking completely new capabilities and 2) lowering the cost of existing capabilities that they all of the sudden make economic sense. An example for 1) is how now we can add a natural-language user interface to any software by simply letting an AI coder translate language instructions into code that calls the APIs of that said software…. It’s honestly hard to imagine developing a new app in the era of LLMs without incorporating a language-based UI. The bar for entry is so slow (just need to call a publically accessible LLM API), and if you don’t do it, your competitor will and will deliver a superior user experience…

Tim Harford: Why chatbots are bound to spout bullshit: ‘Some of what they say is true, but only as a byproduct of learning to seem believable.... Asked… “What is the most cited economics paper of all time?” ChatGPT said that it was “A Theory of Economic History” by Douglass North and Robert Thomas, published in the Journal of Economic History in 1969 and cited more than 30,000 times since... “considered a classic in the field of economic history”…. The paper does not exist…. [Because] the citation is magnificently plausible. What ChatGPT deals in is not truth; it is plausibility. And how could it be otherwise? ChatGPT doesn’t have a model of the world. Instead, it has a model of the kinds of things that people tend to write…

Robert Farley: Disgrace of the Nation: ‘This account of how CJR stiffed an expose on the Nation’s decision-making with respect to Russia and Syria is sickening:... "As Pope killed my report, he revealed that he had throughout been involved in an ambitious and lucratively funded partnership between the CJR and The Nation, and between himself and vanden Heuvel."... The upshot of all this is that Katrina vanden Heuvel, along with her late husband, Stephen Cohen, has played an incredibly toxic role in progressive American journalism over the past decade, especially with respect to any attempt to accurately depict Russian behavior. It is well past time to cut vanden Heuvel out of any respectable progressive journalistic institutions. She personally has played a poisonous role in the national conversation about America’s relationship with Russia. For journalists contemplating working in progressive spaces, the injunction should be clear and straightforward: If Katrina is involved, you most certainly should not be…

Ioannidou et al: "Inflation" or deviations, positive or negative, from target inflation?

I don't know which annoys me more: (a) assuming that control of inflation means only overshooting targets is bad, or (b) assuming that control of deficits means only reducing expenditures.

Very sorry to learn about your sciatica. My wife has the same issue: an hour or more a day of exercises to keep it "merely handicapping and pain-inducing (rather than disabling and agonizing)". She gets physiotherapy too!