How Much of an Information-Technological Revolution Is Now on Our Doorstep?

A piece I wrote for Nikkei <https://www.nikkei.com/article/DGXZQOCD2536V0V21C23A2000000/>, published in Japanese...

Nine months ago New York Times columnist Ezra Klein wrote, with respect to the onrushing approach of the latest revolution in information technology in the form of large-scale neural-network machine-learning model systems like OpenAI's ChatGPT4:

Sundar Pichai... of Google ... [is] not... known for overstatement... [but] said, "A.I. is probably the most important thing humanity has ever worked on. I think of it as something more profound than electricity or fire".... Perhaps the developers will hit a wall they do not expect. But what if they don't?... There is a natural pace to human deliberation. A lot breaks when we are denied the luxury of time.... We can plan for what we can predict (though it is telling that, for the most part, we haven't). What's coming will be weirder.... If we had eons to adjust, perhaps we could do so cleanly. But we do not...

The fear is that we, as a species and as a society, are not equipped for and cannot properly deal with the rapid changes and the resulting upsets in how we work, live, and think that new technology is going to bring to us. Thus we are not prepared to handle it well, and it may trigger some form of societal or human catastrophe.

This fear on Ezra Klein's part is entirely rational. And it is, I believe, correct.

But what the extremely intelligent Ezra Klein misses, I believe, is that this has been the case for us human beings ever since 1750 or so.

The Kingdom of France in the late 1700s was the most powerful, and one of the richest, most secure, and most productively organized, states in the world. The landowners, courtiers, bureaucrats, and military commanders who made up its aristocracy sat secure in their seats. They had no idea that the then-ongoing shifts in technology and society involved in the transition from feudal-agrarian to contractual-commercial society would lead to the political upheaval that was the great French Revolution of 1789-1804. And the pace of technological change then was much slower than the one we have grown accustomed to since at least the days of our great-grandparents.

You in Japan know this very well. The régime established under Tokugawa Ieyasu in 1603 attempted to hold back economic and societal change to the extent possible, and succeeded to a remarkable degree until 1854. But the consequence was that in every generation since, the people of Japan have lived in a new and different economy underpinned by different and much more productive technologies, with the typical Japanese citizen's ability to produce, by manipulating nature and coöperating with other humans, doubling each generation. And each generation since 1854 has seen Japan's leaders and citizens try to rework the pattern of society and economy in order to benefit from greater capabilities and higher productivity.
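To make "a doubling each generation" concrete as an annual rate, the short Python sketch below converts a doubling time into the compound annual growth rate it implies. The 25-year length of a generation is an assumption for illustration, not a figure from the text.

```python
def annual_rate_from_doubling(years_per_doubling: float) -> float:
    """Compound annual growth rate implied by one doubling every `years_per_doubling` years."""
    return 2 ** (1 / years_per_doubling) - 1

# Assumption for illustration only: one generation is about 25 years.
GENERATION_YEARS = 25

rate = annual_rate_from_doubling(GENERATION_YEARS)
print(f"A doubling every {GENERATION_YEARS} years is about {rate:.2%} growth per year.")
```

Taking a generation to be 20 or 30 years instead would give roughly 3.5% or 2.3% per year.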

The road for Japan has been, at times, very rough. The worst stretch of all, for the people of Japan and of the territories its government sought to include in an empire, came in the later part of the 1895-1945 period, when Japan suffered from a bad case of the imperialism-nationalism mind virus it caught from the Western European empires. But as of now every observer must be more satisfied with the current configuration of Japan's economy and society than with any previous one. The citizens and leaders of Japan have managed to take very good advantage of the opportunities to positively rework economies and societies opened up by technological advance.

Thus the task of productively managing the onrushing approach of the latest revolution in information technology and using our enhanced capabilities for good should not be beyond us: we know how to do this—as long as the pace of technological advance is not about to become vastly greater than it has been over past generations, and as long as our systems of adaptation remain as strong as they have been. So those are our two questions that we must answer.

Is the overall pace of technological change arising from the latest revolution in information technology vastly greater than the pace of change in the past? My judgment, at this point, is that the answer is almost surely "no". Economic statistics and forecasts tell us that the pace of technological change over the past decade and into the future is, if anything, somewhat slower than Japan has experienced on average since 1854. And an examination of the pieces of the onrushing latest wave of information-technology revolution reinforces that judgment. I count seven aspects in the latest wave:

1. "Copilots" that suggest the next step.
2. Natural-language interfaces to databases.
3. Verbally expressive software simulations of coaches, companions, and pets.
4. Very-large-scale, very-high-dimension regression and classification analysis.
5. The intersectional combination of (2) and (4) surveying the internet, in the form of ChatGPT, DALL-E, and so on.
6. A way of separating over-optimistic investors from their wealth.
7. The latest wave of American religious enthusiasm.

The first of these is likely to be the biggest. Indeed, we can already see how computer programmers and intensive users will benefit from it, as it is already being implemented in the form of GitHub Copilot. Tools that sit at your side and suggest the next operation you will wish to perform, which you accept by pressing the <tab> key, will provide another upward leap in programmer and intensive-user capabilities akin to those generated by earlier frameworks and abstraction layers. Figure that over the next two decades we will obtain a doubling of productivity for the 5% of the workforce that most intensively uses computers: that is a 0.25%-point increase in the annual rate of economy-wide technological progress.

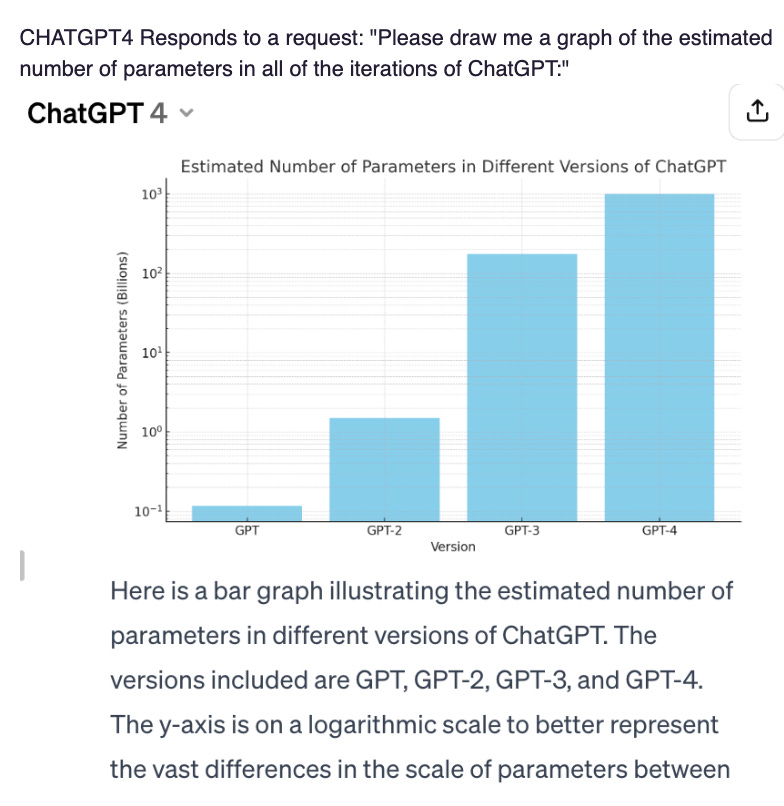
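The 0.25%-point figure can be reproduced with back-of-the-envelope arithmetic. The sketch below assumes (an assumption, not something stated in the text) that the intensive-user group's weight in economy-wide productivity growth equals its 5% share of the workforce; a more careful calculation would weight by its share of output. Spreading a doubling evenly over 20 years gives the 0.25%-point number; compounding the group's growth instead gives a somewhat smaller one.

```python
import math

# Inputs taken from the text.
WORKFORCE_SHARE = 0.05        # the 5% of the workforce that most intensively uses computers
PRODUCTIVITY_MULTIPLE = 2.0   # "a doubling of productivity"
HORIZON_YEARS = 20            # "over the next two decades"

# Assumption (not in the text): the group's weight in economy-wide
# productivity growth equals its share of the workforce.

def linear_boost(share: float, multiple: float, years: int) -> float:
    """Spread the group's total gain evenly over the horizon (no compounding)."""
    return share * (multiple - 1) / years

def compound_boost(share: float, multiple: float, years: int) -> float:
    """Use the group's continuously compounded annual growth rate instead."""
    return share * math.log(multiple) / years

for label, fn in (("linear", linear_boost), ("compound", compound_boost)):
    boost = fn(WORKFORCE_SHARE, PRODUCTIVITY_MULTIPLE, HORIZON_YEARS)
    print(f"{label}: {boost * 100:.2f} percentage points per year")
```

The linear version prints 0.25 and the compounded version about 0.17, so the result is sensitive to how the doubling is spread over the two decades.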

The second is likely to be of an equal magnitude. We communicate, learn, and inform via natural language. ChatGPT4 and its ilk are now good enough to simulate a conversation to the extent that they will soon be able to reliably populate databases in response to human input and draw from databases in order to generate useful language-structured output for humans. These interactions will not be conversations—while there is a human mind on one side, there is no mind at all on the other. But with humans designing the databases backing up the ChatBots, these interactions will be a vast improvement over what we have now.
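A minimal sketch of the division of labor described above: a human-designed database holds the facts, and the language layer only translates between sentences and queries, both to populate the database and to draw from it. The `translate` function is a hypothetical stand-in for a language model (here a toy pattern matcher that recognizes exactly two phrasings), and the `dogs` table, its columns, and the sample names are invented for illustration.

```python
import re
import sqlite3

# Human-designed schema (hypothetical): the facts live here, not in the language layer.
SCHEMA = """
CREATE TABLE IF NOT EXISTS dogs (
    name  TEXT PRIMARY KEY,
    breed TEXT NOT NULL
);
"""

def translate(utterance: str):
    """Hypothetical stand-in for a language model: map an utterance to a
    parameterized SQL statement. This toy version knows only two phrasings."""
    text = utterance.strip()
    m = re.fullmatch(r"add (\w+), a (\w[\w ]*)", text, re.IGNORECASE)
    if m:
        return "INSERT INTO dogs (name, breed) VALUES (?, ?)", (m.group(1), m.group(2))
    m = re.fullmatch(r"what breed is (\w+)\??", text, re.IGNORECASE)
    if m:
        return "SELECT breed FROM dogs WHERE name = ?", (m.group(1),)
    raise ValueError(f"cannot interpret: {utterance!r}")

def respond(conn: sqlite3.Connection, utterance: str) -> str:
    """Run the translated query and render the result as a sentence."""
    sql, params = translate(utterance)
    rows = conn.execute(sql, params).fetchall()
    conn.commit()
    if sql.startswith("INSERT"):
        return f"Recorded {params[0]}."
    if not rows:
        return f"I have no record of {params[0]}."
    return f"{params[0]} is a {rows[0][0]}."

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
print(respond(conn, "Add Rex, a beagle"))
print(respond(conn, "What breed is Rex?"))
```

The point of the design is that the answer "Rex is a beagle." comes from a row a human-designed table stored, not from the language layer's own guess.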

The fact that the conversations will be "good enough" will bring transformations in human society. We have long talked to our pets and our imaginary friends. We have all benefited from interaction and feedback from our coaches. But, in the future, for the first time, our pets and our imaginary friends will answer us back—we will not have to imagine what they would say. And, for the first time, a first-class coach will be available to everyone for free. This may not be for our unalloyed good. But our negative experiences with the social-media drawbacks of corporations incentivized to capture our attention simply to show us ads should teach us lessons that will allow us to inoculate ourselves.

By the time we reach the fourth, we have benefits that, while still substantial, are smaller. Our ability to classify, measure, and predict is already great. Yes, further improvements in machine learning will allow Apple Computer to not just label that a picture contains a dog but correctly label which of my dogs is in which picture, making searching my photo database somewhat easier. And this capability—and all the other better fine-tuning of classification, measurement, and prediction—will enrich my life. But this is at the margin.

But at this point we have, I think, reached the end of the useful contributions the next information-technology wave has to offer us. Language- and image-generation models trained on the entire internet have made a huge public-relations splash, but they are unreliable as sources of information and insight, for what you get out of them is close to the energy you have to put into them to fine-tune them for accuracy. The wholesale move into "AI" of the speculators and projectors who two years ago were telling us that crypto would carry us to the moon is likely to be a net minus for society. And the worries that we are building our software-robot overlords seem to me to be yet another version of California Spiritualism, which is itself a descendant of American patterns of inventive religious thought we have seen regularly since the First Great Awakening of Jonathan Edwards and George Whitefield in New England in the 1730s.

However, add all these up, and they promise to be a significant plus for humanity. We can guesstimate that the next wave of information technology will drive a boost to the rate of advanced-country technological progress in the range of 0.5%-points to 1.0%-points per year over the next 20 years. That will be very welcome, especially as we must somewhere find the resources to begin cushioning and compensating people for the losses that will be generated by global warming. But that is not enough to make the pace of change unmanageably fast—except to the extent that the pace of change has already been, since 1854, unmanageably fast.
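To see what a boost of 0.5%-points to 1.0%-points per year adds up to, the sketch below compounds it over the 20-year horizon. It approximates the effect as the boost compounded on its own, ignoring its small interaction with the baseline growth rate; the numbers are illustrative arithmetic, not a forecast.

```python
HORIZON_YEARS = 20  # "over the next 20 years"

def cumulative_gain(boost: float, years: int = HORIZON_YEARS) -> float:
    """Approximate proportional rise in the productivity level from adding
    `boost` (a fraction, e.g. 0.005 for 0.5%-points) to annual growth for `years` years."""
    return (1 + boost) ** years - 1

for boost in (0.005, 0.010):
    print(f"+{boost:.1%} per year for {HORIZON_YEARS} years -> level {cumulative_gain(boost):.1%} higher")
```

Under that approximation, productivity after 20 years would be roughly 10.5% to 22% higher than it would otherwise have been.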

Are there big minuses? The big fear is that this will be the first time that technological change threatens to disemploy well-paid white-collar workers rather than blue-collar workers, and that this will cause much more societal upset than did previous waves. I, however, remember that the Great Depression of the 1930s taught middle classes in industrial countries all around the globe that their prosperity was precarious, and that they had powerful interests in common with lower-paid working classes. And I remember that the generation that followed was one of remarkable societal harmony, relatively speaking, in the industrial countries.

Thus while I agree that this infotech wave is likely to bring some precarity to those who had been comfortable and privileged in their white-collar status, governments can manage precarity. The principal block to its management has, since the coming of the Neoliberal Order around 1980, been a refusal of the comfortable to take it seriously. As is often the case, the middle classes and the professional classes do not see themselves as having anything in common with the working classes.

But there was a time—in the aftermath of the Great Depression and World War II—when reality had taught the middle classes and the professional classes that they had a lot in common with the working classes. And that time was the best time in human history, at least as far as economic growth and political harmony in the Global North were concerned.

So perhaps there is an upside here, once we take this feedback into account.

As a very-long-time software architect, I think folks overestimate the effect of Copilot. Tools for "code completion" have existed in IDEs for a long time, and Copilot is really just the follow-on in that area.

That said, a lot of "software engineers" (i.e., the programmers who aren't really engineers) have rarely used code completion and other IDE capabilities very well, so maybe that class of developers will improve (a good thing).

But most of the real issues with software-development productivity have a lot more to do with bad engineering, changing requirements, mismatch with customer needs, etc., than with the ability to get better code completion. Programming is the "easy part" of being a software engineer.

I think you are underestimating the potential power of ML/AI to have significant multiplier effects on the way we do things, from the consumer to the industry. One reason is that you are rather fixated on the latest shiny ML, LLMs and their boosters promising the moon. As you linked to an excellent article by Dave Karpf, who was more cautious in his assessment of LLMs (rightly, I believe), you are perhaps seeing just the trees, not the forest. Admittedly, I am more of a techno-optimist, but I see more of the range of AI tools and how they might come together. The early history of AI was much more about solving various logical and planning problems using symbolic logic. We progressed to hand-crafted, rule-based tools that solved complex problems reasonably well but were expensive and time-consuming to produce. Then we saw the birth of machine learning, which allowed any table of data to be quickly translated into various ML models, like decision trees. But all that got largely derailed when ANNs, particularly large ones, got a huge boost from Geoff Hinton, and pattern recognition of data, particularly images, improved dramatically; we were off to the races. LLMs seem to have largely solved many pattern problems, including blowing Turing's "Imitation Game" test out of the water, even fooling smart people into believing that a computer running an LLM was sentient.

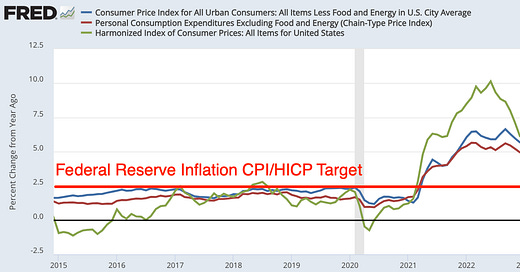

Some demos of the capabilities of LLMs were quite impressive, but less so when investigated by academics. The public has now tested various iterations of LLMs, and it is clear that they have serious limitations. Hence Karpf et al. are probably right that LLM tools will be more like spreadsheets and word processors in their productivity gains, especially for knowledge workers. Interrogating the FRED database verbally and getting exactly the charts you want, with post-processing done on the data to combine series with equations, is going to really help anyone who uses such databases, especially if the data needs to be acquired from different sources and combined in creative ways.

But when we go back to earlier AI tools, we get to see how combining them with LLMs might well make huge productivity gains. All the tedious artisanal care in doing so many chores might no longer be needed, allowing greater democratization of the tasks.

Take as an example 3D printing, which not so long ago was the toy du jour for hobbyists and industry, promising to allow everyone to make stuff on demand using available models. Well, that hasn't quite worked out, as any forum about 3D printing indicates. Just printing an available model is temperamental, and those models need to be built in various ways. Complex organic-shaped models like humanoids need to be scanned and then worked on to create good model files. But imagine if the models could be built much more simply, and the printers imbued with AI to overcome errors and correct the printing parameters. Now that 3D printer works almost as easily as a 2D printer, and model files, whether created verbally or tweaked from a vendor's, are produced or obtained quickly. For example, take a pic of a broken part, ask the AI to locate the part as an image, file, or model, and then print it. Or perhaps make a model of person X, attired in clothes from Y, color it appropriately, and print the model to be Z cm tall. Or perhaps design a part to solve this problem and print it with the appropriate filament, resin, or other material. Such a step change in use would make 3D printers as ubiquitous as microwave ovens, or at least as air fryers. Cannot do it at home? Then local shops can produce the print more quickly and at a much lower cost than a machine shop. Or perhaps a large print shop doing 2D and 3D printing can design and output an object in hours, or while you wait. Additive printing of rocket engines, while much faster and cheaper than traditional methods, still needs considerable careful design work. What if the AI takes your basic specs and does the design work for you, modeling all the performance based on the materials and design and iteratively producing a new engine within a week, allowing even a relative novice to have a rocket engine or other technological artifact on demand? The same goes for computers and electronic parts. No need to outsource your idea to a production shop in China: just have the computer do all the work and have it made locally, perhaps even on your desktop.

This may not enhance the productivity of the economy as a whole so much as that of medicine, but what about AI designing replacement parts for sick or injured people? That would be life-enhancing, as would the [potentially dangerous] applications of designer-organism technology. All these ideas, a fraction of what might be possible, use AI tools as a glue to combine technologies to create a far greater range of artifacts, whether mechanical, living, or artistic, and place this power in the hands of the individual, or the locally trained artisan.

Bruce Sterling once wrote an article about technology and the economy in 2050. He suggested that every new idea would have many others waiting in the wings if the popular idea failed for some reason. There would no longer be excitement with the new because there would be an abundance of similar ideas to choose from. This is not unlike the ennui I get with each new computer language announced these days. Yawn.

In a sense, these are tools that do seem like spreadsheets on steroids. But I see them as much more, invading the physical world, not just the virtual one. AIs could be the agents creating new ideas, rather than humans. Perhaps it would be a "Midas Plague", but I think that the potential for uplifting humanity with "technology that is indistinguishable from magic" might be a huge game changer if done right. A person in that future would look back at our period with horror, thankful that the dreadful burdens and limitations of our time were nearly banished. [Realistically, society would face different problems, and maybe the AI assistants and tools would not eliminate the complexity of the society, just replace it with new problems. But at least it would be a richer world.]